Causal Discovery example#

The goal of this notebook is to show how causal discovery methods can work with DoWhy. We use discovery methods from causal-learn repo. As we will see, causal discovery methods require appropriate assumptions for the correctness guarantees, and thus there will be variance across results returned by different methods in practice. These methods, however, may be combined usefully with domain knowledge to construct the final causal graph.

[1]:

import dowhy

from dowhy import CausalModel

import numpy as np

import pandas as pd

import graphviz

import networkx as nx

np.set_printoptions(precision=3, suppress=True)

np.random.seed(0)

Utility function#

We define a utility function to draw the directed acyclic graph.

[2]:

def make_graph(adjacency_matrix, labels=None):

idx = np.abs(adjacency_matrix) > 0.01

dirs = np.where(idx)

d = graphviz.Digraph(engine='dot')

names = labels if labels else [f'x{i}' for i in range(len(adjacency_matrix))]

for name in names:

d.node(name)

for to, from_, coef in zip(dirs[0], dirs[1], adjacency_matrix[idx]):

d.edge(names[from_], names[to], label=str(coef))

return d

def str_to_dot(string):

'''

Converts input string from graphviz library to valid DOT graph format.

'''

graph = string.strip().replace('\n', ';').replace('\t','')

graph = graph[:9] + graph[10:-2] + graph[-1] # Removing unnecessary characters from string

return graph

Experiments on the Auto-MPG dataset#

In this section, we will use a dataset on the technical specification of cars. The dataset is downloaded from UCI Machine Learning Repository. The dataset contains 9 attributes and 398 instances. We do not know the true causal graph for the dataset and will use causal-learn to discover it. The causal graph obtained will then be used to estimate the causal effect.

1. Load the data#

[3]:

# Load a modified version of the Auto MPG data: Quinlan,R.. (1993). Auto MPG. UCI Machine Learning Repository. https://doi.org/10.24432/C5859H.

data_mpg = pd.read_csv("datasets/auto_mpg.csv", index_col=0)

print(data_mpg.shape)

data_mpg.head()

(392, 6)

[3]:

| mpg | cylinders | displacement | horsepower | weight | acceleration | |

|---|---|---|---|---|---|---|

| 0 | 18.0 | 8.0 | 307.0 | 130.0 | 3504.0 | 12.0 |

| 1 | 15.0 | 8.0 | 350.0 | 165.0 | 3693.0 | 11.5 |

| 2 | 18.0 | 8.0 | 318.0 | 150.0 | 3436.0 | 11.0 |

| 3 | 16.0 | 8.0 | 304.0 | 150.0 | 3433.0 | 12.0 |

| 4 | 17.0 | 8.0 | 302.0 | 140.0 | 3449.0 | 10.5 |

Causal Discovery with causal-learn#

We use the causal-learn library to perform causal discovery on the Auto-MPG dataset. We use three methods for causal discovery here: PC, GES, and LiNGAM. These methods are widely used and do not take much time to run. Hence, these are ideal for an introduction to the topic. Causal-learn provides a comprehensive list of well-tested causal-discovery methods, and readers are welcome to explore.

The documentation for the methods used are as follows:

More methods could be found in the causal-learn documentation [link].

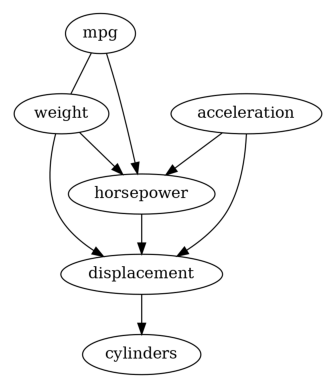

We first try the PC algorithm with default parameters.

[4]:

from causallearn.search.ConstraintBased.PC import pc

labels = [f'{col}' for i, col in enumerate(data_mpg.columns)]

data = data_mpg.to_numpy()

cg = pc(data)

# Visualization using pydot

from causallearn.utils.GraphUtils import GraphUtils

import matplotlib.image as mpimg

import matplotlib.pyplot as plt

import io

pyd = GraphUtils.to_pydot(cg.G, labels=labels)

tmp_png = pyd.create_png(f="png")

fp = io.BytesIO(tmp_png)

img = mpimg.imread(fp, format='png')

plt.axis('off')

plt.imshow(img)

plt.show()

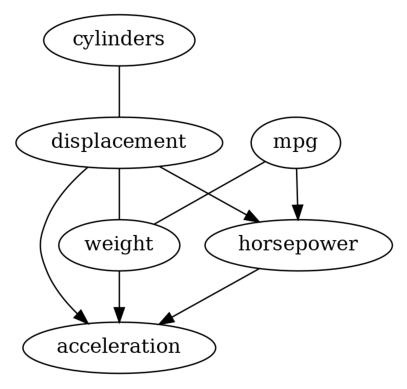

Then we have a causal graph discovered by PC. Let us also try GES to see its result.

[5]:

from causallearn.search.ScoreBased.GES import ges

# default parameters

Record = ges(data)

# Visualization using pydot

from causallearn.utils.GraphUtils import GraphUtils

import matplotlib.image as mpimg

import matplotlib.pyplot as plt

import io

pyd = GraphUtils.to_pydot(Record['G'], labels=labels)

tmp_png = pyd.create_png(f="png")

fp = io.BytesIO(tmp_png)

img = mpimg.imread(fp, format='png')

plt.axis('off')

plt.imshow(img)

plt.show()

Well, these two results are different, which is not rare when applying causal discovery on real-world dataset, since the required assumptions on the data-generating process are hard to verify.

In addition, the graphs returned by PC and GES are CPDAGs instead of DAGs, so it is possible to have undirected edges (e.g., the result returned by GES). Thus, causal effect estimataion is difficult for those methods, since there may be absence of backdoor, instrumental or frontdoor variables. In order to get a DAG, we decide to try LiNGAM on our dataset.

[6]:

from causallearn.search.FCMBased import lingam

model_lingam = lingam.ICALiNGAM()

model_lingam.fit(data)

from causallearn.search.FCMBased.lingam.utils import make_dot

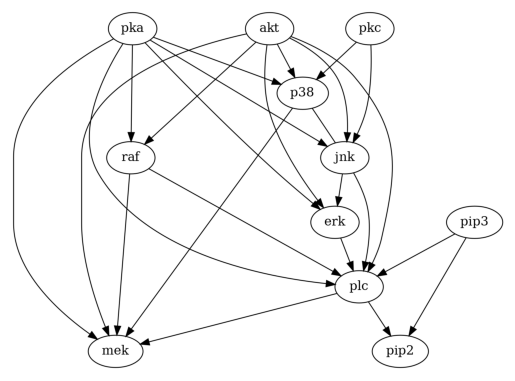

make_dot(model_lingam.adjacency_matrix_, labels=labels)

[6]:

Now we have a DAG and are ready to estimate the causal effects based on that.

Estimate causal effects using Linear Regression#

Now let us see the estimate of causal effect of mpg on weight.

[7]:

# Obtain valid dot format

graph_dot = make_graph(model_lingam.adjacency_matrix_, labels=labels)

# Define Causal Model

model=CausalModel(

data = data_mpg,

treatment='mpg',

outcome='weight',

graph=str_to_dot(graph_dot.source))

# Identification

identified_estimand = model.identify_effect(proceed_when_unidentifiable=True)

print(identified_estimand)

# Estimation

estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.linear_regression",

control_value=0,

treatment_value=1,

confidence_intervals=True,

test_significance=True)

print("Causal Estimate is " + str(estimate.value))

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

──────(E[weight|cylinders])

d[mpg]

Estimand assumption 1, Unconfoundedness: If U→{mpg} and U→weight then P(weight|mpg,cylinders,U) = P(weight|mpg,cylinders)

### Estimand : 2

Estimand name: iv

No such variable(s) found!

### Estimand : 3

Estimand name: frontdoor

No such variable(s) found!

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)

/home/runner/work/dowhy/dowhy/dowhy/causal_estimator.py:272: FutureWarning: DataFrameGroupBy.apply operated on the grouping columns. This behavior is deprecated, and in a future version of pandas the grouping columns will be excluded from the operation. Either pass `include_groups=False` to exclude the groupings or explicitly select the grouping columns after groupby to silence this warning.

conditional_estimates = by_effect_mods.apply(estimate_effect_fn)