Conditional Average Treatment Effects (CATE) with DoWhy and EconML

This is an experimental feature that lets you use EconML methods from within DoWhy. With EconML, CATE can be estimated using a variety of methods.

All four steps of causal inference in DoWhy remain the same: model, identify, estimate, and refute. The key difference is that we now call EconML methods in the estimation step. There is also a simpler example using linear regression to build intuition for CATE estimators.

All datasets are generated using linear structural equations.

[1]:

%load_ext autoreload

%autoreload 2

[2]:

import numpy as np

import pandas as pd

import logging

import dowhy

from dowhy import CausalModel

import dowhy.datasets

import econml

import warnings

warnings.filterwarnings('ignore')

BETA = 10

[3]:

data = dowhy.datasets.linear_dataset(BETA, num_common_causes=4, num_samples=10000,

num_instruments=2, num_effect_modifiers=2,

num_treatments=1,

treatment_is_binary=False,

num_discrete_common_causes=2,

num_discrete_effect_modifiers=0,

one_hot_encode=False)

df=data['df']

print(df.head())

print("True causal estimate is", data["ate"])

X0 X1 Z0 Z1 W0 W1 W2 W3 v0 \

0 -1.020238 0.590365 1.0 0.880679 -1.566900 1.596776 0 1 25.058953

1 0.000471 -0.327323 1.0 0.852780 -1.644666 -1.154112 0 1 9.292373

2 1.416555 0.555931 1.0 0.316299 -1.532908 1.404353 0 2 20.092126

3 2.831842 -1.415962 0.0 0.466932 -0.461832 -0.629515 3 3 15.024105

4 3.860574 2.695878 1.0 0.530751 0.304898 2.154185 3 2 41.320310

y

0 232.905550

1 80.663324

2 281.066552

3 199.431572

4 972.931910

True causal estimate is 11.485931318618377

[4]:

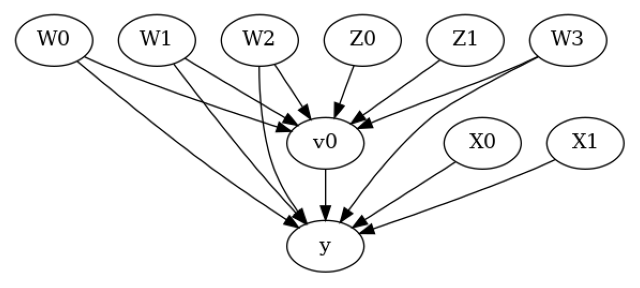

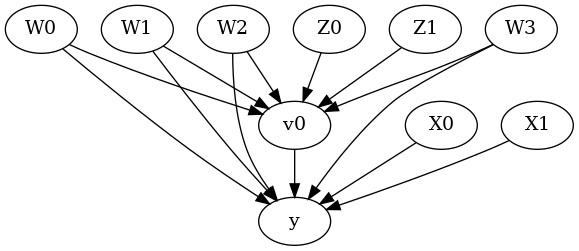

model = CausalModel(data=data["df"],

treatment=data["treatment_name"], outcome=data["outcome_name"],

graph=data["gml_graph"])

[5]:

model.view_model()

from IPython.display import Image, display

display(Image(filename="causal_model.png"))

[6]:

identified_estimand= model.identify_effect(proceed_when_unidentifiable=True)

print(identified_estimand)

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(E[y|W0,W3,W2,W1])
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W2,W1,U) = P(y|v0,W0,W3,W2,W1)

### Estimand : 2

Estimand name: iv

Estimand expression:

 ⎡                              -1⎤
 ⎢    d        ⎛    d          ⎞  ⎥
E⎢─────────(y)⋅⎜─────────([v₀])⎟  ⎥
 ⎣d[Z₁  Z₀]    ⎝d[Z₁  Z₀]      ⎠  ⎦

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

### Estimand : 3

Estimand name: frontdoor

No such variable(s) found!

Linear Model

First, let us build some intuition using a linear model for estimating CATE. The effect modifiers (which lead to a heterogeneous treatment effect) can be modeled as interaction terms with the treatment, so their values modulate the effect of the treatment.
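The interaction idea can be sketched directly with least squares. The following is a minimal NumPy illustration, not DoWhy's implementation, assuming simulated data with one confounder W, two effect modifiers X0 and X1, and a true effect of 10 + 2·X0 - X1 per unit of treatment. Fitting y on v0, W, X and the products v0·X0 and v0·X1 recovers the base effect and the modifier slopes; the CATE at given modifier values is then the base effect plus the interaction terms, scaled by treatment_value - control_value.

```python
import numpy as np

# Simulated data (an assumption for illustration, not the dataset above):
# confounder W, effect modifiers X0 and X1, continuous treatment v0.
rng = np.random.default_rng(0)
n = 5_000
W = rng.normal(size=n)
X = rng.normal(size=(n, 2))
v0 = 0.5 * W + rng.normal(size=n)
true_slope = 10 + 2 * X[:, 0] - 1 * X[:, 1]  # effect of one unit of v0
y = true_slope * v0 + 3 * W + X @ np.array([1.0, -0.5]) + rng.normal(size=n)

# Columns: intercept, v0, W, X0, X1, v0*X0, v0*X1
design = np.column_stack([np.ones(n), v0, W, X, v0 * X[:, 0], v0 * X[:, 1]])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)
beta_v0 = coef[1]   # effect of v0 when both modifiers are 0
gamma = coef[5:7]   # interaction coefficients for v0*X0 and v0*X1


def cate(x, control_value=0.0, treatment_value=1.0):
    """Estimated effect of moving v0 from control_value to treatment_value at modifiers x."""
    return (beta_v0 + x @ gamma) * (treatment_value - control_value)


print(round(beta_v0, 2), np.round(gamma, 2))
print(np.round(cate(np.array([[0.0, 0.0], [1.0, -1.0]])), 2))
```

The conditional estimates printed by the linear-regression cell appear to follow the same idea, averaging the estimated effect within quantile bins of X0 and X1.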

Below is the estimated effect of changing the treatment from 0 to 1.

[7]:

linear_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.linear_regression",

control_value=0,

treatment_value=1)

print(linear_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(E[y|W0,W3,W2,W1])
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W2,W1,U) = P(y|v0,W0,W3,W2,W1)

## Realized estimand

b: y~v0+W0+W3+W2+W1+v0*X1+v0*X0

Target units:

## Estimate

Mean value: 11.485662684843657

### Conditional Estimates

__categorical__X1  __categorical__X0
(-3.324, -0.116]   (-3.8729999999999998, -0.844]     5.740070
                   (-0.844, -0.263]                  7.534507
                   (-0.263, 0.238]                   8.555222
                   (0.238, 0.8]                      9.563993
                   (0.8, 3.861]                     11.298972
(-0.116, 0.486]    (-3.8729999999999998, -0.844]     7.721940
                   (-0.844, -0.263]                  9.382385
                   (-0.263, 0.238]                  10.371832
                   (0.238, 0.8]                     11.397311
                   (0.8, 3.861]                     13.090825
(0.486, 0.989]     (-3.8729999999999998, -0.844]     8.798377
                   (-0.844, -0.263]                 10.482438
                   (-0.263, 0.238]                  11.472269
                   (0.238, 0.8]                     12.557162
                   (0.8, 3.861]                     14.102801
(0.989, 1.57]      (-3.8729999999999998, -0.844]     9.942512
                   (-0.844, -0.263]                 11.523979
                   (-0.263, 0.238]                  12.570343
                   (0.238, 0.8]                     13.611363
                   (0.8, 3.861]                     15.252642
(1.57, 5.379]      (-3.8729999999999998, -0.844]    11.833480
                   (-0.844, -0.263]                 13.301856
                   (-0.263, 0.238]                  14.442518
                   (0.238, 0.8]                     15.411161
                   (0.8, 3.861]                     17.191771
dtype: float64

EconML methods

We now move to the more advanced methods from the EconML package for estimating CATE.

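First, let us look at the double machine learning estimator. The method_name argument combines the identification strategy prefix (here “backdoor.”) with the fully qualified name of the EconML class that we want to use. For double ML, the class is “econml.dml.DML”, so the method name is “backdoor.econml.dml.DML”.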

target_units defines the units over which the causal estimate is computed. It can be a lambda function that filters the original dataframe, a new pandas DataFrame, or a string naming one of the three main kinds of target units (“ate”, “att”, and “atc”). Below we show an example with a lambda function.
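Conceptually, a callable target_units acts as a boolean row filter. The sketch below shows that idea with plain pandas, assuming a toy frame with a hypothetical per-unit cate column; DoWhy's internal handling may differ in detail. When the effect grows with X0, averaging only over units with X0 > 1 gives a larger number than averaging over all units.

```python
import numpy as np
import pandas as pd

# Toy frame (assumption): effect modifiers plus a hypothetical per-unit CATE column.
rng = np.random.default_rng(1)
df = pd.DataFrame({"X0": rng.normal(size=1_000), "X1": rng.normal(size=1_000)})
df["cate"] = 10 + 2 * df["X0"] - df["X1"]

# A callable target_units, written like the one passed to estimate_effect.
target_units = lambda frame: frame["X0"] > 1

mask = target_units(df)                  # boolean Series, one entry per row
subset = df[mask]                        # rows that count as target units
effect_on_subset = subset["cate"].mean() # average effect over the target units
effect_on_all = df["cate"].mean()        # average over every row (the "ate" case)
print(len(subset), round(effect_on_subset, 2), round(effect_on_all, 2))
```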

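method_params is passed on to EconML: the init_params dictionary supplies the arguments used to construct the EconML estimator, and fit_params supplies the arguments for its fit method. For details on the allowed parameters, refer to the EconML documentation.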
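To see what model_y, model_t and model_final do, here is a minimal NumPy sketch of the partialling-out idea behind double machine learning. It assumes simulated data and plain least squares in place of the gradient boosting and LassoCV models used in the cell below; EconML's DML adds more machinery than this. Stage one predicts the outcome and the treatment from (X, W) on held-out folds (cross-fitting); stage two regresses the outcome residuals on the treatment residuals multiplied by features of X, which gives a CATE model that is linear in those features.

```python
import numpy as np


def ols(A, b):
    """Least-squares coefficients of b on A (A already contains any intercept)."""
    return np.linalg.lstsq(A, b, rcond=None)[0]


# Simulated data (assumption): confounders W, effect modifiers X, continuous T.
rng = np.random.default_rng(2)
n = 4_000
W = rng.normal(size=(n, 3))
X = rng.normal(size=(n, 2))
T = W @ np.array([1.0, -0.5, 0.3]) + rng.normal(size=n)
theta = 10 + 2 * X[:, 0]  # true CATE
Y = theta * T + W @ np.array([2.0, 1.0, -1.0]) + rng.normal(size=n)

# Stage 1 (roles of model_y and model_t): predict Y and T from (X, W), 2-fold cross-fitting.
XW = np.column_stack([np.ones(n), X, W])
folds = rng.integers(0, 2, size=n)
y_res, t_res = np.empty(n), np.empty(n)
for k in (0, 1):
    train, test = folds != k, folds == k
    y_res[test] = Y[test] - XW[test] @ ols(XW[train], Y[train])
    t_res[test] = T[test] - XW[test] @ ols(XW[train], T[train])

# Stage 2 (role of model_final): Y residuals on T residuals times features of X.
phi = np.column_stack([np.ones(n), X])  # featurizer output with a bias column
final_coef = ols(t_res[:, None] * phi, y_res)
cate_hat = phi @ final_coef             # estimated effect of a one-unit change in T
print(np.round(final_coef, 2))
```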

[8]:

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LassoCV

from sklearn.ensemble import GradientBoostingRegressor

dml_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.dml.DML",

control_value = 0,

treatment_value = 1,

target_units = lambda df: df["X0"]>1, # condition used for CATE

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=False)},

"fit_params":{}})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(E[y|W0,W3,W2,W1])
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W2,W1,U) = P(y|v0,W0,W3,W2,W1)

## Realized estimand

b: y~v0+W0+W3+W2+W1 | X1,X0

Target units: Data subset defined by a function

## Estimate

Mean value: 14.51951009651247

Effect estimates: [[13.94752361]

[12.56585253]

[23.36581262]

...

[17.43976473]

[14.47873079]

[ 8.93944387]]

[9]:

print("True causal estimate is", data["ate"])

True causal estimate is 11.485931318618377

[10]:

dml_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.dml.DML",

control_value = 0,

treatment_value = 1,

target_units = 1, # condition used for CATE

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(E[y|W0,W3,W2,W1])
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W2,W1,U) = P(y|v0,W0,W3,W2,W1)

## Realized estimand

b: y~v0+W0+W3+W2+W1 | X1,X0

Target units:

## Estimate

Mean value: 11.424345202222062

Effect estimates: [[ 9.09750376]

[ 9.25683681]

[13.9543803 ]

...

[ 8.71707597]

[13.22902116]

[ 8.25011555]]

CATE Object and Confidence Intervals

EconML provides its own methods for computing confidence intervals. The example below uses BootstrapInference.
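The bootstrap idea itself is simple: re-run the whole estimator on datasets resampled with replacement and read an interval off the spread of the results. The sketch below is a percentile bootstrap in NumPy, assuming a toy slope estimator in place of DML; the helper bootstrap_ci is hypothetical, and EconML's BootstrapInference refits the EconML estimator itself and may construct the interval differently. Here n_bootstrap plays the role of n_bootstrap_samples.

```python
import numpy as np


def bootstrap_ci(data, estimator, n_bootstrap=100, alpha=0.05, seed=0):
    """Percentile bootstrap interval: re-run `estimator` on rows resampled with replacement."""
    rng = np.random.default_rng(seed)
    n = len(data)
    stats = np.array([estimator(data[rng.integers(0, n, size=n)]) for _ in range(n_bootstrap)])
    return np.quantile(stats, [alpha / 2, 1 - alpha / 2])


# Toy example (assumption): the slope of y on t stands in for the effect estimator.
rng = np.random.default_rng(3)
t = rng.normal(size=2_000)
y = 10 * t + rng.normal(size=2_000)
data = np.column_stack([t, y])


def slope(d):
    return np.polyfit(d[:, 0], d[:, 1], 1)[0]


point = slope(data)
low, high = bootstrap_ci(data, slope, n_bootstrap=100)
print(round(point, 3), round(low, 3), round(high, 3))
```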

[11]:

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LassoCV

from sklearn.ensemble import GradientBoostingRegressor

from econml.inference import BootstrapInference

dml_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.econml.dml.DML",

target_units = "ate",

confidence_intervals=True,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final": LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{

'inference': BootstrapInference(n_bootstrap_samples=100, n_jobs=-1),

}

})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(E[y|W0,W3,W2,W1])
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W2,W1,U) = P(y|v0,W0,W3,W2,W1)

## Realized estimand

b: y~v0+W0+W3+W2+W1 | X1,X0

Target units: ate

## Estimate

Mean value: 11.425685565894034

Effect estimates: [[ 9.15564041]

[ 9.2537945 ]

[13.87373819]

...

[ 8.75872548]

[13.25527175]

[ 8.25492472]]

95.0% confidence interval: [[[ 9.10838748 9.21923951 13.8992338 ... 8.71054937 13.27473674

8.17519823]]

[[ 9.31433906 9.39554548 14.1624217 ... 8.8964614 13.49674624

8.39994617]]]

You can provide new inputs as target units and estimate CATE on them

[12]:

test_cols= data['effect_modifier_names'] # only need effect modifiers' values

test_arr = [np.random.uniform(0,1, 10) for _ in range(len(test_cols))] # all variables are sampled uniformly, sample of 10

test_df = pd.DataFrame(np.array(test_arr).transpose(), columns=test_cols)

dml_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.econml.dml.DML",

target_units = test_df,

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}

})

print(dml_estimate.cate_estimates)

[[11.75631911]

[11.65133902]

[13.0289313 ]

[11.98334045]

[12.66140049]

[13.13347342]

[11.71395507]

[11.87373649]

[11.85647526]

[11.49815093]]

You can also retrieve the raw EconML estimator object for further operations

[13]:

print(dml_estimate._estimator_object)

<econml.dml.dml.DML object at 0x7f555b0e7700>

Works with any EconML method

In addition to double machine learning, below we show example analyses using DRLearner, an instrumental-variable estimator (DMLIV), and metalearners (T-learner).

Binary treatment, Binary outcome

[14]:

data_binary = dowhy.datasets.linear_dataset(BETA, num_common_causes=4, num_samples=10000,

num_instruments=1, num_effect_modifiers=2,

treatment_is_binary=True, outcome_is_binary=True)

# convert boolean values to {0,1} numeric

data_binary['df'].v0 = data_binary['df'].v0.astype(int)

data_binary['df'].y = data_binary['df'].y.astype(int)

print(data_binary['df'])

model_binary = CausalModel(data=data_binary["df"],

treatment=data_binary["treatment_name"], outcome=data_binary["outcome_name"],

graph=data_binary["gml_graph"])

identified_estimand_binary = model_binary.identify_effect(proceed_when_unidentifiable=True)

X0 X1 Z0 W0 W1 W2 W3 v0 y

0 2.513692 -0.949739 1.0 1.444644 -0.015898 -0.506672 -0.204757 1 1

1 1.948241 -1.111496 0.0 -0.102631 1.532169 0.898939 -1.515319 0 0

2 2.272320 1.190890 1.0 1.206555 -1.127297 -0.661477 -0.917829 1 1

3 1.575488 -0.901774 1.0 0.873994 0.139044 -0.188861 2.690220 1 1

4 2.343328 -2.681281 1.0 1.166733 -2.202804 1.902970 0.761191 1 1

... ... ... ... ... ... ... ... .. ..

9995 3.392957 -0.777179 0.0 1.484418 -1.103265 -0.925810 0.144994 0 1

9996 1.038491 -0.125780 1.0 1.249530 0.120870 0.671930 -0.472931 1 1

9997 1.162608 -0.350567 0.0 2.143919 -0.149828 -0.166978 -0.780778 0 1

9998 0.041927 -0.525199 0.0 0.052121 -0.003352 -0.209708 -0.167241 1 1

9999 -0.607472 -0.971756 0.0 1.442778 -1.733215 0.077009 0.422101 0 1

[10000 rows x 9 columns]

Using DRLearner estimator
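A doubly robust (DR) learner combines a propensity model (the model_propensity argument below) with outcome regressions, builds a pseudo-outcome for each unit, and then fits a final model of that pseudo-outcome on the effect modifiers. The NumPy sketch below shows that recipe under stated assumptions: simulated data with a continuous outcome (unlike the binary outcome in this example), a hand-written Newton-Raphson logistic regression, linear outcome models, and no cross-fitting (EconML's learners cross-fit their nuisance models). It illustrates the technique, not EconML's LinearDRLearner code.

```python
import numpy as np


def fit_logistic(A, t, iters=25):
    """Logistic regression via Newton-Raphson (A includes an intercept column)."""
    w = np.zeros(A.shape[1])
    for _ in range(iters):
        p = 1 / (1 + np.exp(-A @ w))
        grad = A.T @ (t - p)
        hess = A.T @ (A * (p * (1 - p))[:, None])
        w += np.linalg.solve(hess, grad)
    return w


def ols(A, b):
    return np.linalg.lstsq(A, b, rcond=None)[0]


# Simulated data (assumption): binary treatment, confounders W, effect modifier X.
rng = np.random.default_rng(4)
n = 20_000
W = rng.normal(size=(n, 2))
X = rng.normal(size=n)
A = np.column_stack([np.ones(n), X, W])
e_true = 1 / (1 + np.exp(-(W @ np.array([0.8, -0.5]))))
T = rng.binomial(1, e_true)
tau = 2 + 1 * X  # true CATE
Y = tau * T + W @ np.array([1.0, 1.0]) + rng.normal(size=n)

# Nuisance models: propensity e(x, w) and one outcome regression per treatment arm.
e_hat = 1 / (1 + np.exp(-A @ fit_logistic(A, T)))
mu1 = A @ ols(A[T == 1], Y[T == 1])
mu0 = A @ ols(A[T == 0], Y[T == 0])

# Doubly robust (AIPW) pseudo-outcome, then a linear final stage on X.
psi = mu1 - mu0 + T * (Y - mu1) / e_hat - (1 - T) * (Y - mu0) / (1 - e_hat)
final = ols(np.column_stack([np.ones(n), X]), psi)
print(np.round(final, 2), round(psi.mean(), 2))
```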

[15]:

from sklearn.linear_model import LogisticRegressionCV

# Both the treatment (v0) and the outcome (y) are binary here (converted to 0/1 above)

drlearner_estimate = model_binary.estimate_effect(identified_estimand_binary,

method_name="backdoor.econml.dr.LinearDRLearner",

confidence_intervals=False,

method_params={"init_params":{

'model_propensity': LogisticRegressionCV(cv=3, solver='lbfgs')  # multi_class omitted: 'auto' is the default and the argument is deprecated in recent scikit-learn

},

"fit_params":{}

})

print(drlearner_estimate)

print("True causal estimate is", data_binary["ate"])

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(E[y|W0,W3,W2,W1])
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W2,W1,U) = P(y|v0,W0,W3,W2,W1)

## Realized estimand

b: y~v0+W0+W3+W2+W1 | X1,X0

Target units: ate

## Estimate

Mean value: 0.39736027177781136

Effect estimates: [[0.4679193 ]

[0.42023331]

[0.56337141]

...

[0.40563726]

[0.31877685]

[0.25034381]]

True causal estimate is 0.3634

Instrumental Variable Method
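Instrument-based estimators rest on a simple ratio. The NumPy sketch below assumes simulated data with an unobserved confounder U, a single instrument Z that affects the outcome only through the treatment, and a constant effect of 10. After partialling the controls W out of the outcome, treatment, and instrument, the ratio of residual covariances recovers the effect, while the residual-on-residual regression that ignores Z is biased by U. DMLIV additionally lets the effect vary with X and uses flexible, cross-fitted first-stage models, which this sketch omits.

```python
import numpy as np


def residualize(target, A):
    """Residual of target after a least-squares fit on A."""
    return target - A @ np.linalg.lstsq(A, target, rcond=None)[0]


# Simulated data (assumption): instrument Z, hidden confounder U, controls W.
rng = np.random.default_rng(5)
n = 20_000
W = rng.normal(size=(n, 2))
Z = rng.normal(size=n)
U = rng.normal(size=n)
T = 1.0 * Z + W @ np.array([0.5, -0.5]) + U + rng.normal(size=n)
Y = 10 * T + W @ np.array([1.0, 2.0]) + 3 * U + rng.normal(size=n)

A = np.column_stack([np.ones(n), W])
y_r, t_r, z_r = residualize(Y, A), residualize(T, A), residualize(Z, A)

naive = (t_r @ y_r) / (t_r @ t_r)  # partialling-out OLS, biased because of U
iv = (z_r @ y_r) / (z_r @ t_r)     # instrument-based estimate
print(round(naive, 2), round(iv, 2))
```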

[16]:

dmliv_estimate = model.estimate_effect(identified_estimand,

method_name="iv.econml.iv.dml.DMLIV",

target_units = lambda df: df["X0"]>-1,

confidence_intervals=False,

method_params={"init_params":{

'discrete_treatment':False,

'discrete_instrument':False

},

"fit_params":{}})

print(dmliv_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: iv

Estimand expression:

 ⎡                              -1⎤
 ⎢    d        ⎛    d          ⎞  ⎥
E⎢─────────(y)⋅⎜─────────([v₀])⎟  ⎥
 ⎣d[Z₁  Z₀]    ⎝d[Z₁  Z₀]      ⎠  ⎦

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

## Realized estimand

b: y~v0+W0+W3+W2+W1 | X1,X0

Target units: Data subset defined by a function

## Estimate

Mean value: 12.097825287082701

Effect estimates: [[ 9.35716809]

[13.98987901]

[12.68238683]

...

[ 8.85593969]

[13.35699246]

[ 8.35671364]]

Metalearners

[17]:

data_experiment = dowhy.datasets.linear_dataset(BETA, num_common_causes=5, num_samples=10000,

num_instruments=2, num_effect_modifiers=5,

treatment_is_binary=True, outcome_is_binary=False)

# convert boolean values to {0,1} numeric

data_experiment['df'].v0 = data_experiment['df'].v0.astype(int)

print(data_experiment['df'])

model_experiment = CausalModel(data=data_experiment["df"],

treatment=data_experiment["treatment_name"], outcome=data_experiment["outcome_name"],

graph=data_experiment["gml_graph"])

identified_estimand_experiment = model_experiment.identify_effect(proceed_when_unidentifiable=True)

X0 X1 X2 X3 X4 Z0 Z1 \

0 -0.301678 -2.455302 -0.739204 -0.218813 -0.069734 0.0 0.426942

1 -0.369898 -1.716193 0.179661 -1.427303 1.697845 0.0 0.301589

2 -1.465097 -0.662092 -0.567272 0.641881 1.089975 0.0 0.160968

3 0.716540 0.276498 0.026588 -1.154345 1.390566 0.0 0.132511

4 -1.119177 -1.707515 -0.656426 -1.832652 -0.144310 0.0 0.934839

... ... ... ... ... ... ... ...

9995 0.285096 0.497896 -0.982330 -0.323736 0.468448 0.0 0.406000

9996 2.278117 -1.037574 -1.241589 -1.004553 1.355427 0.0 0.286527

9997 -1.236976 0.029614 -0.866352 0.439351 1.525078 0.0 0.054115

9998 1.454419 -1.183015 -1.451662 0.914016 1.224927 0.0 0.911403

9999 -2.924473 -0.453069 0.099117 1.443503 1.448285 0.0 0.408617

W0 W1 W2 W3 W4 v0 y

0 0.333396 1.147279 -1.630514 0.316371 -0.356632 1 0.128093

1 1.035610 -0.175915 1.879343 0.025013 -0.144187 1 19.201289

2 1.504647 2.104735 0.166122 -1.549324 -0.161518 1 11.313626

3 1.311252 0.186016 0.902487 1.110130 0.933304 1 29.859807

4 1.560982 -0.338660 0.095378 -2.362832 -1.413930 1 -10.423972

... ... ... ... ... ... .. ...

9995 -0.432827 -0.665251 0.689354 -0.008761 -1.096496 1 8.170760

9996 1.106290 2.005316 -1.421486 -0.313084 -1.958064 0 -10.338037

9997 0.886274 -0.074640 2.195251 -0.312669 -1.773011 1 15.744006

9998 -0.185202 0.165817 -0.920899 -1.277211 -0.511828 1 7.291146

9999 -0.037033 2.095833 0.078869 -0.398338 -0.615187 1 11.485524

[10000 rows x 14 columns]

[18]:

from sklearn.ensemble import RandomForestRegressor

metalearner_estimate = model_experiment.estimate_effect(identified_estimand_experiment,

method_name="backdoor.econml.metalearners.TLearner",

confidence_intervals=False,

method_params={"init_params":{

'models': RandomForestRegressor()

},

"fit_params":{}

})

print(metalearner_estimate)

print("True causal estimate is", data_experiment["ate"])

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(E[y|W0,W3,W4,W2,W1])
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W4,W2,W1,U) = P(y|v0,W0,W3,W4,W2,W1)

## Realized estimand

b: y~v0+X1+X4+X2+X3+X0+W0+W3+W4+W2+W1

Target units: ate

## Estimate

Mean value: 16.007464813917412

Effect estimates: [[ 6.21811436]

[19.87636774]

[17.05098844]

...

[23.95414995]

[17.57187607]

[17.17127926]]

True causal estimate is 12.180194317871324

Avoiding retraining the estimator

Once an estimator is fitted, it can be reused to estimate effects on different data points. To do this, pass fit_estimator=False to estimate_effect. This works for any EconML estimator; below we show an example with the T-learner.
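Reusing a fitted estimator amounts to separating fitting from prediction. The sketch below is a hypothetical minimal T-learner in NumPy (the class name LinearTLearner is made up for illustration), assuming linear outcome models in place of the RandomForestRegressor used above. It fits one outcome model per treatment arm once, then evaluates mu1(x) - mu0(x) on new rows without refitting. The rows passed at prediction time must contain the same feature columns used during fitting.

```python
import numpy as np


class LinearTLearner:
    """Hypothetical minimal T-learner: one linear outcome model per treatment arm."""

    def fit(self, features, t, y):
        A = np.column_stack([np.ones(len(features)), features])
        self.coef1 = np.linalg.lstsq(A[t == 1], y[t == 1], rcond=None)[0]
        self.coef0 = np.linalg.lstsq(A[t == 0], y[t == 0], rcond=None)[0]
        return self

    def effect(self, features):
        A = np.column_stack([np.ones(len(features)), features])
        return A @ self.coef1 - A @ self.coef0  # mu1(x) - mu0(x)


# Simulated data (assumption): randomized binary treatment, three features.
rng = np.random.default_rng(6)
n = 5_000
features = rng.normal(size=(n, 3))
t = rng.binomial(1, 0.5, size=n)
y = (12 + 3 * features[:, 0]) * t + features @ np.array([1.0, -1.0, 0.5]) + rng.normal(size=n)

learner = LinearTLearner().fit(features, t, y)  # fit once ...
new_rows = features[-5:]                        # ... then reuse on other rows
print(np.round(learner.effect(new_rows), 2))
```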

[19]:

# For metalearners, provide all the features used during fitting (effect modifiers and common causes): drop the treatment, outcome, and instrument columns

metalearner_estimate = model_experiment.estimate_effect(identified_estimand_experiment,

method_name="backdoor.econml.metalearners.TLearner",

confidence_intervals=False,

fit_estimator=False,

target_units=data_experiment["df"].drop(["v0","y", "Z0", "Z1"], axis=1)[9995:],

method_params={})

print(metalearner_estimate)

print("True causal estimate is", data_experiment["ate"])

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(E[y|W0,W3,W4,W2,W1])
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W4,W2,W1,U) = P(y|v0,W0,W3,W4,W2,W1)

## Realized estimand

b: y~v0+X1+X4+X2+X3+X0+W0+W3+W4+W2+W1

Target units: Data subset provided as a data frame

## Estimate

Mean value: 17.362551183099747

Effect estimates: [[13.92373289]

[14.19171774]

[23.95414995]

[17.57187607]

[17.17127926]]

True causal estimate is 12.180194317871324

Refuting the estimate

Adding a random common cause variable

[20]:

res_random=model.refute_estimate(identified_estimand, dml_estimate, method_name="random_common_cause")

print(res_random)

Refute: Add a random common cause

Estimated effect:12.115712153502965

New effect:12.109461442057952

p value:0.84
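The random common cause refuter adds an independently generated variable as a common cause and re-estimates; a sound estimate should barely move, as in the output above. The NumPy sketch below illustrates the check with a simulated linear dataset and a hypothetical ols_effect helper standing in for the real estimator; DoWhy also repeats the simulation to report a p value, which this sketch does not compute.

```python
import numpy as np


def ols_effect(t, y, covariates):
    """Coefficient on t from a least-squares fit of y on [1, t, covariates]."""
    A = np.column_stack([np.ones(len(t)), t, covariates])
    return np.linalg.lstsq(A, y, rcond=None)[0][1]


# Simulated data (assumption): observed confounders W, effect of t on y is 10.
rng = np.random.default_rng(7)
n = 10_000
W = rng.normal(size=(n, 2))
t = W @ np.array([1.0, 0.5]) + rng.normal(size=n)
y = 10 * t + W @ np.array([2.0, -1.0]) + rng.normal(size=n)

estimated = ols_effect(t, y, W)
random_cause = rng.normal(size=n)  # independent of everything else
new = ols_effect(t, y, np.column_stack([W, random_cause]))
print(round(estimated, 3), round(new, 3))
```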

Adding an unobserved common cause variable

[21]:

res_unobserved=model.refute_estimate(identified_estimand, dml_estimate, method_name="add_unobserved_common_cause",

confounders_effect_on_treatment="linear", confounders_effect_on_outcome="linear",

effect_strength_on_treatment=0.01, effect_strength_on_outcome=0.02)

print(res_unobserved)

Refute: Add an Unobserved Common Cause

Estimated effect:12.115712153502965

New effect:12.111363897149426
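The unobserved common cause refuter asks how much the estimate would move if a confounder of a given strength had been left out of the analysis. The rough NumPy simulation below illustrates that question, not DoWhy's implementation. It assumes linear effects, a hypothetical ols_effect helper, and made-up strengths; the loop variable strength only loosely mirrors effect_strength_on_treatment and effect_strength_on_outcome, not their exact semantics in DoWhy. The bias of an estimate that adjusts only for W grows as the hidden confounder strengthens.

```python
import numpy as np


def ols_effect(t, y, covariates):
    """Coefficient on t from a least-squares fit of y on [1, t, covariates]."""
    A = np.column_stack([np.ones(len(t)), t, covariates])
    return np.linalg.lstsq(A, y, rcond=None)[0][1]


rng = np.random.default_rng(9)
n = 20_000
W = rng.normal(size=(n, 2))
U = rng.normal(size=n)  # confounder that the analysis does not adjust for

estimates = {}
for strength in (0.0, 0.5, 1.0):
    # U pushes both treatment and outcome; a larger strength means stronger confounding.
    t = W @ np.array([1.0, 0.5]) + strength * U + rng.normal(size=n)
    y = 10 * t + W @ np.array([2.0, -1.0]) + 2 * strength * U + rng.normal(size=n)
    estimates[strength] = ols_effect(t, y, W)  # adjusts for W only

print({s: round(e, 2) for s, e in estimates.items()})
```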

Replacing treatment with a random (placebo) variable

[22]:

res_placebo=model.refute_estimate(identified_estimand, dml_estimate,

method_name="placebo_treatment_refuter", placebo_type="permute",

num_simulations=10 # at least 100 is good, setting to 10 for speed

)

print(res_placebo)

Refute: Use a Placebo Treatment

Estimated effect:12.115712153502965

New effect:0.017091367253473128

p value:0.31239668718326236
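With placebo_type="permute", the treatment column is shuffled, which breaks its link to the outcome, so the true effect of the placebo is zero and a sound estimator should report a value near zero, as in the output above. The NumPy sketch below illustrates the check on a simulated linear dataset with a hypothetical ols_effect helper in place of the real estimator; it does not reproduce DoWhy's p value computation.

```python
import numpy as np


def ols_effect(t, y, covariates):
    """Coefficient on t from a least-squares fit of y on [1, t, covariates]."""
    A = np.column_stack([np.ones(len(t)), t, covariates])
    return np.linalg.lstsq(A, y, rcond=None)[0][1]


# Simulated data (assumption): observed confounders W, effect of t on y is 10.
rng = np.random.default_rng(8)
n = 10_000
W = rng.normal(size=(n, 2))
t = W @ np.array([1.0, 0.5]) + rng.normal(size=n)
y = 10 * t + W @ np.array([2.0, -1.0]) + rng.normal(size=n)

estimated = ols_effect(t, y, W)
# 10 permuted placebo treatments, matching num_simulations=10 in the cell above.
placebo_effects = [ols_effect(rng.permutation(t), y, W) for _ in range(10)]
print(round(estimated, 2), np.round(placebo_effects, 3))
```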

Removing a random subset of the data

[23]:

res_subset=model.refute_estimate(identified_estimand, dml_estimate,

method_name="data_subset_refuter", subset_fraction=0.8,

num_simulations=10)

print(res_subset)

Refute: Use a subset of data

Estimated effect:12.115712153502965

New effect:12.115321916658097

p value:0.4968805668234225
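The data subset refuter re-estimates the effect on random subsets of the rows (subset_fraction=0.8 above); a stable estimator should give similar values on each subset. The NumPy sketch below illustrates this with a simulated linear dataset and a hypothetical ols_effect helper standing in for the real estimator; DoWhy additionally summarizes the simulations with a p value, which is not computed here.

```python
import numpy as np


def ols_effect(t, y, covariates):
    """Coefficient on t from a least-squares fit of y on [1, t, covariates]."""
    A = np.column_stack([np.ones(len(t)), t, covariates])
    return np.linalg.lstsq(A, y, rcond=None)[0][1]


# Simulated data (assumption): observed confounders W, effect of t on y is 10.
rng = np.random.default_rng(10)
n = 10_000
W = rng.normal(size=(n, 2))
t = W @ np.array([1.0, 0.5]) + rng.normal(size=n)
y = 10 * t + W @ np.array([2.0, -1.0]) + rng.normal(size=n)

estimated = ols_effect(t, y, W)
subset_effects = []
for _ in range(10):  # mirrors num_simulations=10
    idx = rng.choice(n, size=int(0.8 * n), replace=False)  # keep 80% of rows
    subset_effects.append(ols_effect(t[idx], y[idx], W[idx]))
print(round(estimated, 3), np.round(subset_effects, 3))
```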

More refutation methods to come, especially specific to the CATE estimators.