Conditional Average Treatment Effects (CATE) with DoWhy and EconML

This is an experimental feature where we use EconML methods from DoWhy. Using EconML allows CATE estimation using different methods.

All four steps of causal inference in DoWhy remain the same: model, identify, estimate, and refute. The key difference is that we now call econml methods in the estimation step. There is also a simpler example using linear regression to understand the intuition behind CATE estimators.

All datasets are generated using linear structural equations.

[1]:

%load_ext autoreload

%autoreload 2

[2]:

import numpy as np

import pandas as pd

import logging

import dowhy

from dowhy import CausalModel

import dowhy.datasets

import econml

import warnings

warnings.filterwarnings('ignore')

BETA = 10

[3]:

data = dowhy.datasets.linear_dataset(BETA, num_common_causes=4, num_samples=10000,

num_instruments=2, num_effect_modifiers=2,

num_treatments=1,

treatment_is_binary=False,

num_discrete_common_causes=2,

num_discrete_effect_modifiers=0,

one_hot_encode=False)

df=data['df']

print(df.head())

print("True causal estimate is", data["ate"])

X0 X1 Z0 Z1 W0 W1 W2 W3 v0 \

0 0.680855 -0.081822 0.0 0.363662 -1.457851 0.486804 2 3 21.904925

1 -1.366921 1.606660 1.0 0.505995 0.022552 -0.744353 3 3 36.342609

2 0.448970 -0.942918 0.0 0.790112 0.488717 -0.630550 2 0 13.715890

3 -0.413223 -1.072336 0.0 0.999187 -2.757718 -0.282076 2 0 13.279910

4 -0.133890 0.752749 1.0 0.307046 -0.829915 -1.434104 3 1 21.662710

y

0 282.284051

1 244.554705

2 143.071317

3 78.660784

4 219.933669

True causal estimate is 10.230462989973262

[4]:

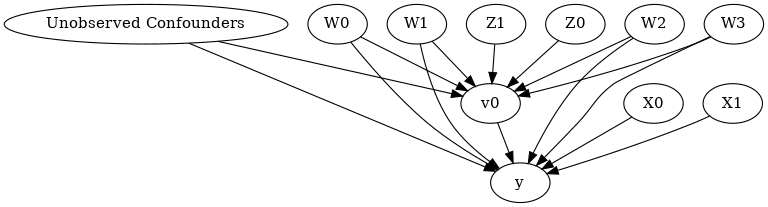

model = CausalModel(data=data["df"],

treatment=data["treatment_name"], outcome=data["outcome_name"],

graph=data["gml_graph"])

[5]:

model.view_model()

from IPython.display import Image, display

display(Image(filename="causal_model.png"))

[6]:

identified_estimand= model.identify_effect(proceed_when_unidentifiable=True)

print(identified_estimand)

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|Z1,X1,W1,Z0,W3,W2,W0,X0))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0,U) = P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z0, Z1])*Derivative([v0], [Z0, Z1])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z0,Z1})

Estimand assumption 2, Exclusion: If we remove {Z0,Z1}→{v0}, then ¬({Z0,Z1}→y)

### Estimand : 3

Estimand name: frontdoor

No such variable found!

Linear Model

First, let us build some intuition using a linear model for estimating CATE. The effect modifiers (that lead to a heterogeneous treatment effect) can be modeled as interaction terms with the treatment. Thus, their value modulates the effect of treatment.

Below the estimated effect of changing treatment from 0 to 1.

[7]:

linear_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.linear_regression",

control_value=0,

treatment_value=1)

print(linear_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|Z1,X1,W1,Z0,W3,W2,W0,X0))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0,U) = P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0)

## Realized estimand

b: y~v0+Z1+X1+W1+Z0+W3+W2+W0+X0

Target units: ate

## Estimate

Mean value: 10.690211742892949

EconML methods

We now move to the more advanced methods from the EconML package for estimating CATE.

First, let us look at the double machine learning estimator. Method_name corresponds to the fully qualified name of the class that we want to use. For double ML, it is “econml.dml.DML”.

Target units defines the units over which the causal estimate is to be computed. This can be a lambda function filter on the original dataframe, a new Pandas dataframe, or a string corresponding to the three main kinds of target units (“ate”, “att” and “atc”). Below we show an example of a lambda function.

Method_params are passed directly to EconML. For details on allowed parameters, refer to the EconML documentation.

[8]:

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LassoCV

from sklearn.ensemble import GradientBoostingRegressor

dml_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.dml.DML",

control_value = 0,

treatment_value = 1,

target_units = lambda df: df["X0"]>1, # condition used for CATE

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=False)},

"fit_params":{}})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|Z1,X1,W1,Z0,W3,W2,W0,X0))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0,U) = P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0)

## Realized estimand

b: y~v0+Z1+X1+W1+Z0+W3+W2+W0+X0 |

Target units: Data subset defined by a function

## Estimate

Mean value: 12.12120239567344

Effect estimates: [12.1212024 12.1212024 12.1212024 ... 12.1212024 12.1212024 12.1212024]

[9]:

print("True causal estimate is", data["ate"])

True causal estimate is 10.230462989973262

[10]:

dml_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.dml.DML",

control_value = 0,

treatment_value = 1,

target_units = 1, # condition used for CATE

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|Z1,X1,W1,Z0,W3,W2,W0,X0))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0,U) = P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0)

## Realized estimand

b: y~v0+Z1+X1+W1+Z0+W3+W2+W0+X0 |

Target units:

## Estimate

Mean value: 11.64277316710624

Effect estimates: [11.64277317 11.64277317 11.64277317 ... 11.64277317 11.64277317

11.64277317]

CATE Object and Confidence Intervals

EconML provides its own methods to compute confidence intervals. Using BootstrapInference in the example below.

[11]:

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LassoCV

from sklearn.ensemble import GradientBoostingRegressor

from econml.inference import BootstrapInference

dml_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.econml.dml.DML",

target_units = "ate",

confidence_intervals=True,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final": LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{

'inference': BootstrapInference(n_bootstrap_samples=100, n_jobs=-1),

}

})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|Z1,X1,W1,Z0,W3,W2,W0,X0))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0,U) = P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0)

## Realized estimand

b: y~v0+Z1+X1+W1+Z0+W3+W2+W0+X0 |

Target units: ate

## Estimate

Mean value: 11.884263413815686

Effect estimates: [11.88426341 11.88426341 11.88426341 ... 11.88426341 11.88426341

11.88426341]

95.0% confidence interval: (array([11.81925706, 11.81925706, 11.81925706, ..., 11.81925706,

11.81925706, 11.81925706]), array([12.69017104, 12.69017104, 12.69017104, ..., 12.69017104,

12.69017104, 12.69017104]))

Can provide a new inputs as target units and estimate CATE on them.

[12]:

test_cols= data['effect_modifier_names'] # only need effect modifiers' values

test_arr = [np.random.uniform(0,1, 10) for _ in range(len(test_cols))] # all variables are sampled uniformly, sample of 10

test_df = pd.DataFrame(np.array(test_arr).transpose(), columns=test_cols)

dml_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.econml.dml.DML",

target_units = test_df,

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}

})

print(dml_estimate.cate_estimates)

[11.87749573 11.87749573 11.87749573 ... 11.87749573 11.87749573

11.87749573]

Can also retrieve the raw EconML estimator object for any further operations

[13]:

print(dml_estimate._estimator_object)

<econml.dml.dml.DML object at 0x7f16ffc87100>

Works with any EconML method

In addition to double machine learning, below we example analyses using orthogonal forests, DRLearner (bug to fix), and neural network-based instrumental variables.

Binary treatment, Binary outcome

[14]:

data_binary = dowhy.datasets.linear_dataset(BETA, num_common_causes=4, num_samples=10000,

num_instruments=2, num_effect_modifiers=2,

treatment_is_binary=True, outcome_is_binary=True)

# convert boolean values to {0,1} numeric

data_binary['df'].v0 = data_binary['df'].v0.astype(int)

data_binary['df'].y = data_binary['df'].y.astype(int)

print(data_binary['df'])

model_binary = CausalModel(data=data_binary["df"],

treatment=data_binary["treatment_name"], outcome=data_binary["outcome_name"],

graph=data_binary["gml_graph"])

identified_estimand_binary = model_binary.identify_effect(proceed_when_unidentifiable=True)

X0 X1 Z0 Z1 W0 W1 W2 \

0 -0.187533 0.389821 1.0 0.839453 0.940559 1.415391 -0.380913

1 -0.748730 0.671668 0.0 0.475463 0.892004 1.294085 1.611890

2 -0.808333 -0.732631 0.0 0.868789 0.428347 0.675622 0.962348

3 -1.752145 -0.339531 1.0 0.914105 1.286270 -0.819968 1.832863

4 -0.108159 -1.170617 0.0 0.671865 0.987034 0.268190 -0.551639

... ... ... ... ... ... ... ...

9995 -1.499062 0.679497 1.0 0.762626 2.221605 -0.350508 -0.256909

9996 -1.196394 -0.888150 1.0 0.562215 1.147892 -0.895030 2.146514

9997 0.246945 0.305430 1.0 0.942246 -0.310852 0.226676 1.843343

9998 -1.626697 1.613843 0.0 0.646475 -0.423104 2.829836 1.762817

9999 -2.709163 2.278675 1.0 0.743850 0.863928 0.361682 0.568979

W3 v0 y

0 0.813579 1 1

1 0.351258 1 1

2 0.414451 1 1

3 0.184053 1 1

4 -0.152793 1 1

... ... .. ..

9995 -0.506154 1 1

9996 -0.534854 1 1

9997 -0.333092 1 1

9998 0.728452 1 1

9999 0.667374 1 1

[10000 rows x 10 columns]

Using DRLearner estimator

[15]:

from sklearn.linear_model import LogisticRegressionCV

#todo needs binary y

drlearner_estimate = model_binary.estimate_effect(identified_estimand_binary,

method_name="backdoor.econml.drlearner.LinearDRLearner",

confidence_intervals=False,

method_params={"init_params":{

'model_propensity': LogisticRegressionCV(cv=3, solver='lbfgs', multi_class='auto')

},

"fit_params":{}

})

print(drlearner_estimate)

print("True causal estimate is", data_binary["ate"])

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|Z1,X1,W1,Z0,W3,W2,W0,X0))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0,U) = P(y|v0,Z1,X1,W1,Z0,W3,W2,W0,X0)

## Realized estimand

b: y~v0+Z1+X1+W1+Z0+W3+W2+W0+X0 |

Target units: ate

## Estimate

Mean value: 0.6194410343673133

Effect estimates: [0.61944103 0.61944103 0.61944103 ... 0.61944103 0.61944103 0.61944103]

True causal estimate is 0.1553

Instrumental Variable Method

[17]:

import keras

from econml.deepiv import DeepIVEstimator

dims_zx = len(model.get_instruments())+len(model.get_effect_modifiers())

dims_tx = len(model._treatment)+len(model.get_effect_modifiers())

treatment_model = keras.Sequential([keras.layers.Dense(128, activation='relu', input_shape=(dims_zx,)), # sum of dims of Z and X

keras.layers.Dropout(0.17),

keras.layers.Dense(64, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(32, activation='relu'),

keras.layers.Dropout(0.17)])

response_model = keras.Sequential([keras.layers.Dense(128, activation='relu', input_shape=(dims_tx,)), # sum of dims of T and X

keras.layers.Dropout(0.17),

keras.layers.Dense(64, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(32, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(1)])

deepiv_estimate = model.estimate_effect(identified_estimand,

method_name="iv.econml.deepiv.DeepIV",

target_units = lambda df: df["X0"]>-1,

confidence_intervals=False,

method_params={"init_params":{'n_components': 10, # Number of gaussians in the mixture density networks

'm': lambda z, x: treatment_model(keras.layers.concatenate([z, x])), # Treatment model,

"h": lambda t, x: response_model(keras.layers.concatenate([t, x])), # Response model

'n_samples': 1, # Number of samples used to estimate the response

'first_stage_options': {'epochs':25},

'second_stage_options': {'epochs':25}

},

"fit_params":{}})

print(deepiv_estimate)

Epoch 1/25

10000/10000 [==============================] - 1s 101us/step - loss: 11.6538

Epoch 2/25

10000/10000 [==============================] - 1s 59us/step - loss: 3.7950

Epoch 3/25

10000/10000 [==============================] - 1s 56us/step - loss: 3.0525

Epoch 4/25

10000/10000 [==============================] - 0s 48us/step - loss: 2.9154

Epoch 5/25

10000/10000 [==============================] - 1s 58us/step - loss: 2.8358

Epoch 6/25

10000/10000 [==============================] - 1s 53us/step - loss: 2.7859

Epoch 7/25

10000/10000 [==============================] - 1s 56us/step - loss: 2.7401

Epoch 8/25

10000/10000 [==============================] - 1s 58us/step - loss: 2.7221

Epoch 9/25

10000/10000 [==============================] - 1s 58us/step - loss: 2.7038

Epoch 10/25

10000/10000 [==============================] - 1s 52us/step - loss: 2.6872

Epoch 11/25

10000/10000 [==============================] - 1s 55us/step - loss: 2.6518

Epoch 12/25

10000/10000 [==============================] - 1s 53us/step - loss: 2.6039

Epoch 13/25

10000/10000 [==============================] - 0s 48us/step - loss: 2.5914

Epoch 14/25

10000/10000 [==============================] - 1s 52us/step - loss: 2.5683

Epoch 15/25

10000/10000 [==============================] - 1s 55us/step - loss: 2.5612

Epoch 16/25

10000/10000 [==============================] - 0s 47us/step - loss: 2.5512

Epoch 17/25

10000/10000 [==============================] - 0s 49us/step - loss: 2.5505

Epoch 18/25

10000/10000 [==============================] - 1s 53us/step - loss: 2.5420

Epoch 19/25

10000/10000 [==============================] - 0s 49us/step - loss: 2.5394

Epoch 20/25

10000/10000 [==============================] - 0s 50us/step - loss: 2.5293

Epoch 21/25

10000/10000 [==============================] - 0s 50us/step - loss: 2.5286

Epoch 22/25

10000/10000 [==============================] - 1s 51us/step - loss: 2.5297

Epoch 23/25

10000/10000 [==============================] - 1s 50us/step - loss: 2.5300

Epoch 24/25

10000/10000 [==============================] - 0s 49us/step - loss: 2.5244

Epoch 25/25

10000/10000 [==============================] - 1s 50us/step - loss: 2.5150

Epoch 1/25

10000/10000 [==============================] - 1s 122us/step - loss: 26619.1314

Epoch 2/25

10000/10000 [==============================] - 1s 66us/step - loss: 12579.7654

Epoch 3/25

10000/10000 [==============================] - 1s 69us/step - loss: 11734.7099

Epoch 4/25

10000/10000 [==============================] - 1s 80us/step - loss: 11379.2674

Epoch 5/25

10000/10000 [==============================] - 1s 70us/step - loss: 11188.0021

Epoch 6/25

10000/10000 [==============================] - 1s 69us/step - loss: 11216.7738

Epoch 7/25

10000/10000 [==============================] - 1s 69us/step - loss: 11229.4640

Epoch 8/25

10000/10000 [==============================] - 1s 68us/step - loss: 11196.7148

Epoch 9/25

10000/10000 [==============================] - 1s 68us/step - loss: 11116.8998

Epoch 10/25

10000/10000 [==============================] - 1s 68us/step - loss: 11170.7726

Epoch 11/25

10000/10000 [==============================] - 1s 67us/step - loss: 10935.9323

Epoch 12/25

10000/10000 [==============================] - 1s 65us/step - loss: 11147.3363

Epoch 13/25

10000/10000 [==============================] - 1s 70us/step - loss: 11035.0295

Epoch 14/25

10000/10000 [==============================] - 1s 65us/step - loss: 11127.9812

Epoch 15/25

10000/10000 [==============================] - 1s 66us/step - loss: 10992.1307

Epoch 16/25

10000/10000 [==============================] - 1s 67us/step - loss: 10733.7403

Epoch 17/25

10000/10000 [==============================] - 1s 75us/step - loss: 11034.8012

Epoch 18/25

10000/10000 [==============================] - 1s 75us/step - loss: 10997.0383

Epoch 19/25

10000/10000 [==============================] - 1s 77us/step - loss: 11014.5592

Epoch 20/25

10000/10000 [==============================] - 1s 80us/step - loss: 10885.7880

Epoch 21/25

10000/10000 [==============================] - 1s 63us/step - loss: 10905.1800

Epoch 22/25

10000/10000 [==============================] - 1s 64us/step - loss: 10852.0827

Epoch 23/25

10000/10000 [==============================] - 1s 63us/step - loss: 10641.3063

Epoch 24/25

10000/10000 [==============================] - 1s 67us/step - loss: 10856.0904

Epoch 25/25

10000/10000 [==============================] - 1s 72us/step - loss: 10879.3091

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z0, Z1])*Derivative([v0], [Z0, Z1])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z0,Z1})

Estimand assumption 2, Exclusion: If we remove {Z0,Z1}→{v0}, then ¬({Z0,Z1}→y)

## Realized estimand

b: y~v0+Z1+X1+W1+Z0+W3+W2+W0+X0 | X1,X0

Target units: Data subset defined by a function

## Estimate

Mean value: 0.716738760471344

Effect estimates: [ 0.84010315 2.239563 -0.25856018 ... 0.10548401 0.7332916

1.9136963 ]

Metalearners

[18]:

data_experiment = dowhy.datasets.linear_dataset(BETA, num_common_causes=5, num_samples=10000,

num_instruments=2, num_effect_modifiers=5,

treatment_is_binary=True, outcome_is_binary=False)

# convert boolean values to {0,1} numeric

data_experiment['df'].v0 = data_experiment['df'].v0.astype(int)

print(data_experiment['df'])

model_experiment = CausalModel(data=data_experiment["df"],

treatment=data_experiment["treatment_name"], outcome=data_experiment["outcome_name"],

graph=data_experiment["gml_graph"])

identified_estimand_experiment = model_experiment.identify_effect(proceed_when_unidentifiable=True)

X0 X1 X2 X3 X4 Z0 Z1 \

0 1.889075 -0.148901 -3.321291 0.527714 0.408656 1.0 0.332018

1 -0.278462 0.502559 0.345945 -0.578400 -0.602006 0.0 0.857228

2 -0.868973 -2.328919 -1.114627 0.268724 -0.375727 1.0 0.172623

3 0.960801 0.437432 -0.439229 -0.478421 -0.482326 0.0 0.346306

4 1.747583 0.397312 -0.002248 -1.231510 0.872643 0.0 0.414153

... ... ... ... ... ... ... ...

9995 0.647206 -0.211020 -0.175888 0.409196 -0.119107 0.0 0.802795

9996 -1.129477 0.329195 -0.385835 -1.099640 -1.171596 0.0 0.979090

9997 2.553457 1.340551 -0.524767 0.153859 -0.121225 0.0 0.605828

9998 -0.658872 1.386591 -2.662249 0.248188 0.063398 0.0 0.999788

9999 -0.476124 0.275236 -0.498938 -0.335998 1.497155 0.0 0.093024

W0 W1 W2 W3 W4 v0 y

0 0.075309 2.149438 0.589466 -0.679515 0.481774 1 17.872428

1 0.332104 0.380258 0.176676 -0.066274 -0.130537 1 11.208160

2 1.347730 0.914570 -0.668152 -0.196757 -0.231634 1 3.795458

3 0.332007 0.577365 2.100847 -0.672990 0.513451 1 20.095006

4 0.716787 0.784172 0.792574 -1.049425 -0.882550 0 3.246151

... ... ... ... ... ... .. ...

9995 -0.366199 0.152741 2.344528 0.286931 1.124170 1 20.967334

9996 1.710311 0.621336 -1.317947 -0.555407 -0.550753 1 3.074311

9997 0.575043 1.996095 -0.348195 0.046330 0.311212 1 31.600315

9998 0.749128 0.608419 1.121918 -0.629710 0.075430 1 7.718591

9999 0.526421 1.659487 0.514313 -0.880840 -1.048985 0 5.398943

[10000 rows x 14 columns]

[19]:

from sklearn.ensemble import RandomForestRegressor

metalearner_estimate = model_experiment.estimate_effect(identified_estimand_experiment,

method_name="backdoor.econml.metalearners.TLearner",

confidence_intervals=False,

method_params={"init_params":{

'models': RandomForestRegressor()

},

"fit_params":{}

})

print(metalearner_estimate)

print("True causal estimate is", data_experiment["ate"])

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|Z1,X1,X4,W1,Z0,X3,W3,X2,W4,W2,W0,X0))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,Z1,X1,X4,W1,Z0,X3,W3,X2,W4,W2,W0,X0,U) = P(y|v0,Z1,X1,X4,W1,Z0,X3,W3,X2,W4,W2,W0,X0)

## Realized estimand

b: y~v0+Z1+X1+X4+W1+Z0+X3+W3+X2+W4+W2+W0+X0

Target units: ate

## Estimate

Mean value: 11.281848958239234

Effect estimates: [ 8.24880761 8.85381156 1.98326246 ... 20.4788987 3.77948304

14.93714404]

True causal estimate is 8.619365154692815

Avoiding retraining the estimator

Once an estimator is fitted, it can be reused to estimate effect on different data points. In this case, you can pass fit_estimator=False to estimate_effect. This works for any EconML estimator. We show an example for the T-learner below.

[20]:

# For metalearners, need to provide all the features (except treatmeant and outcome)

metalearner_estimate = model_experiment.estimate_effect(identified_estimand_experiment,

method_name="backdoor.econml.metalearners.TLearner",

confidence_intervals=False,

fit_estimator=False,

target_units=data_experiment["df"].drop(["v0","y"], axis=1)[9995:],

method_params={})

print(metalearner_estimate)

print("True causal estimate is", data_experiment["ate"])

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|Z1,X1,X4,W1,Z0,X3,W3,X2,W4,W2,W0,X0))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,Z1,X1,X4,W1,Z0,X3,W3,X2,W4,W2,W0,X0,U) = P(y|v0,Z1,X1,X4,W1,Z0,X3,W3,X2,W4,W2,W0,X0)

## Realized estimand

b: y~v0+Z1+X1+X4+W1+Z0+X3+W3+X2+W4+W2+W0+X0

Target units: Data subset provided as a data frame

## Estimate

Mean value: 13.155958458684603

Effect estimates: [14.58240352 11.70222833 15.95011529 10.52888709 13.01615806]

True causal estimate is 8.619365154692815

Refuting the estimate

Adding a random common cause variable

[21]:

res_random=model.refute_estimate(identified_estimand, dml_estimate, method_name="random_common_cause")

print(res_random)

Refute: Add a Random Common Cause

Estimated effect:11.877495728144678

New effect:12.27643164609447

Adding an unobserved common cause variable

[22]:

res_unobserved=model.refute_estimate(identified_estimand, dml_estimate, method_name="add_unobserved_common_cause",

confounders_effect_on_treatment="linear", confounders_effect_on_outcome="linear",

effect_strength_on_treatment=0.01, effect_strength_on_outcome=0.02)

print(res_unobserved)

Refute: Add an Unobserved Common Cause

Estimated effect:11.877495728144678

New effect:11.766787905625023

Replacing treatment with a random (placebo) variable

[23]:

res_placebo=model.refute_estimate(identified_estimand, dml_estimate,

method_name="placebo_treatment_refuter", placebo_type="permute",

num_simulations=10 # at least 100 is good, setting to 10 for speed

)

print(res_placebo)

Refute: Use a Placebo Treatment

Estimated effect:11.877495728144678

New effect:-0.0003870075346435652

p value:0.4908247078335508

Removing a random subset of the data

[24]:

res_subset=model.refute_estimate(identified_estimand, dml_estimate,

method_name="data_subset_refuter", subset_fraction=0.8,

num_simulations=10)

print(res_subset)

Refute: Use a subset of data

Estimated effect:11.877495728144678

New effect:11.924618691290394

p value:0.4286479951555171

More refutation methods to come, especially specific to the CATE estimators.