Lalonde Pandas API Example

by Adam Kelleher

We’ll run through a quick example using the high-level Python API for the DoSampler. The DoSampler is different from most classic causal effect estimators. Instead of estimating statistics under interventions, it aims to provide the generality of Pearlian causal inference. In that context, the joint distribution of the variables under an intervention is the quantity of interest. It’s hard to represent a joint distribution nonparametrically, so instead we provide a sample from that distribution, which we call a “do” sample.

Here, when you specify an outcome, that is the variable you’re sampling under an intervention. We still have to do the usual process of making sure the quantity (the conditional interventional distribution of the outcome) is identifiable. We leverage the familiar components of the rest of the package to do that “under the hood”. You’ll notice some similarity in the kwargs for the DoSampler.

Getting the Data

First, download the data from the LaLonde example.

[1]:

import os, sys

sys.path.append(os.path.abspath("../../../"))

[2]:

from rpy2.robjects import r as R

%load_ext rpy2.ipython

#%R install.packages("Matching")

%R library(Matching)

%R data(lalonde)

%R -o lalonde

lalonde.to_csv("lalonde.csv",index=False)

R[write to console]: Loading required package: MASS

R[write to console]: ##

## Matching (Version 4.9-6, Build Date: 2019-04-07)

## See http://sekhon.berkeley.edu/matching for additional documentation.

## Please cite software as:

## Jasjeet S. Sekhon. 2011. ``Multivariate and Propensity Score Matching

## Software with Automated Balance Optimization: The Matching package for R.''

## Journal of Statistical Software, 42(7): 1-52.

##

[3]:

# the data already loaded in the previous cell. we include the import

# here you so you don't have to keep re-downloading it.

import pandas as pd

lalonde=pd.read_csv("lalonde.csv")

The causal Namespace

We’ve created a “namespace” for pandas.DataFrames containing causal inference methods. You can access it here with lalonde.causal, where lalonde is our pandas.DataFrame, and causal contains all our new methods! These methods are magically loaded into your existing (and future) dataframes when you import dowhy.api.

[4]:

import dowhy.api

Now that we have the causal namespace, lets give it a try!

The do Operation

The key feature here is the do method, which produces a new dataframe replacing the treatment variable with values specified, and the outcome with a sample from the interventional distribution of the outcome. If you don’t specify a value for the treatment, it leaves the treatment untouched:

[5]:

do_df = lalonde.causal.do(x='treat',

outcome='re78',

common_causes=['nodegr', 'black', 'hisp', 'age', 'educ', 'married'],

variable_types={'age': 'c', 'educ':'c', 'black': 'd', 'hisp': 'd',

'married': 'd', 'nodegr': 'd','re78': 'c', 'treat': 'b'})

WARNING:dowhy.causal_model:Causal Graph not provided. DoWhy will construct a graph based on data inputs.

INFO:dowhy.causal_graph:If this is observed data (not from a randomized experiment), there might always be missing confounders. Adding a node named "Unobserved Confounders" to reflect this.

INFO:dowhy.causal_model:Model to find the causal effect of treatment ['treat'] on outcome ['re78']

INFO:dowhy.causal_identifier:Common causes of treatment and outcome:['hisp', 'married', 'educ', 'nodegr', 'U', 'age', 'black']

WARNING:dowhy.causal_identifier:If this is observed data (not from a randomized experiment), there might always be missing confounders. Causal effect cannot be identified perfectly.

WARN: Do you want to continue by ignoring any unobserved confounders? (use proceed_when_unidentifiable=True to disable this prompt) [y/n] y

INFO:dowhy.causal_identifier:Instrumental variables for treatment and outcome:[]

INFO:dowhy.do_sampler:Using WeightingSampler for do sampling.

INFO:dowhy.do_sampler:Caution: do samplers assume iid data.

Notice you get the usual output and prompts about identifiability. This is all dowhy under the hood!

We now have an interventional sample in do_df. It looks very similar to the original dataframe. Compare them:

[6]:

lalonde.head()

[6]:

| age | educ | black | hisp | married | nodegr | re74 | re75 | re78 | u74 | u75 | treat | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 37 | 11 | 1 | 0 | 1 | 1 | 0.0 | 0.0 | 9930.05 | 1 | 1 | 1 |

| 1 | 22 | 9 | 0 | 1 | 0 | 1 | 0.0 | 0.0 | 3595.89 | 1 | 1 | 1 |

| 2 | 30 | 12 | 1 | 0 | 0 | 0 | 0.0 | 0.0 | 24909.50 | 1 | 1 | 1 |

| 3 | 27 | 11 | 1 | 0 | 0 | 1 | 0.0 | 0.0 | 7506.15 | 1 | 1 | 1 |

| 4 | 33 | 8 | 1 | 0 | 0 | 1 | 0.0 | 0.0 | 289.79 | 1 | 1 | 1 |

[7]:

do_df.head()

[7]:

| age | educ | black | hisp | married | nodegr | re74 | re75 | re78 | u74 | u75 | treat | propensity_score | weight | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 28 | 10 | 1 | 0 | 0 | 1 | 0.00 | 2836.510 | 3196.57 | 1 | 0 | 1 | 0.378881 | 2.639348 |

| 1 | 18 | 10 | 1 | 0 | 0 | 1 | 0.00 | 273.553 | 5514.37 | 1 | 0 | 0 | 0.636766 | 1.570436 |

| 2 | 17 | 11 | 1 | 0 | 0 | 1 | 4080.73 | 3796.030 | 0.00 | 0 | 0 | 0 | 0.649913 | 1.538667 |

| 3 | 35 | 10 | 1 | 0 | 0 | 1 | 0.00 | 0.000 | 4666.24 | 1 | 1 | 1 | 0.389989 | 2.564177 |

| 4 | 30 | 11 | 1 | 0 | 1 | 1 | 0.00 | 9311.940 | 3982.80 | 1 | 0 | 0 | 0.579658 | 1.725155 |

Treatment Effect Estimation

We could get a naive estimate before for a treatment effect by doing

[8]:

(lalonde[lalonde['treat'] == 1].mean() - lalonde[lalonde['treat'] == 0].mean())['re78']

[8]:

We can do the same with our new sample from the interventional distribution to get a causal effect estimate

[9]:

(do_df[do_df['treat'] == 1].mean() - do_df[do_df['treat'] == 0].mean())['re78']

[9]:

We could get some rough error bars on the outcome using the normal approximation for a 95% confidence interval, like

[10]:

import numpy as np

1.96*np.sqrt((do_df[do_df['treat'] == 1].var()/len(do_df[do_df['treat'] == 1])) +

(do_df[do_df['treat'] == 0].var()/len(do_df[do_df['treat'] == 0])))['re78']

[10]:

but note that these DO NOT contain propensity score estimation error. For that, a bootstrapping procedure might be more appropriate.

This is just one statistic we can compute from the interventional distribution of 're78'. We can get all of the interventional moments as well, including functions of 're78'. We can leverage the full power of pandas, like

[11]:

do_df['re78'].describe()

[11]:

count 445.000000

mean 5361.635829

std 6456.849676

min 0.000000

25% 0.000000

50% 3881.280000

75% 8061.490000

max 60307.900000

Name: re78, dtype: float64

[12]:

lalonde['re78'].describe()

[12]:

count 445.000000

mean 5300.765138

std 6631.493362

min 0.000000

25% 0.000000

50% 3701.810000

75% 8124.720000

max 60307.900000

Name: re78, dtype: float64

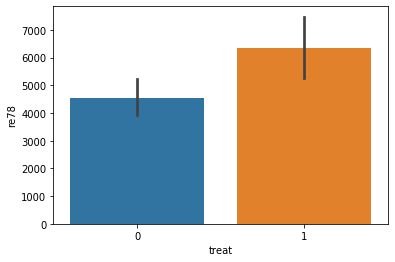

and even plot aggregations, like

[13]:

%matplotlib inline

[14]:

import seaborn as sns

sns.barplot(data=lalonde, x='treat', y='re78')

[14]:

<matplotlib.axes._subplots.AxesSubplot at 0x7f0090dbe470>

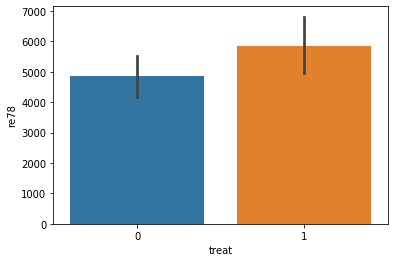

[15]:

sns.barplot(data=do_df, x='treat', y='re78')

[15]:

<matplotlib.axes._subplots.AxesSubplot at 0x7f008e699e80>

Specifying Interventions

You can find the distribution of the outcome under an intervention to set the value of the treatment.

[16]:

do_df = lalonde.causal.do(x={'treat': 1},

outcome='re78',

common_causes=['nodegr', 'black', 'hisp', 'age', 'educ', 'married'],

variable_types={'age': 'c', 'educ':'c', 'black': 'd', 'hisp': 'd',

'married': 'd', 'nodegr': 'd','re78': 'c', 'treat': 'b'})

WARNING:dowhy.causal_model:Causal Graph not provided. DoWhy will construct a graph based on data inputs.

INFO:dowhy.causal_graph:If this is observed data (not from a randomized experiment), there might always be missing confounders. Adding a node named "Unobserved Confounders" to reflect this.

INFO:dowhy.causal_model:Model to find the causal effect of treatment ['treat'] on outcome ['re78']

INFO:dowhy.causal_identifier:Common causes of treatment and outcome:['hisp', 'married', 'educ', 'nodegr', 'U', 'age', 'black']

WARNING:dowhy.causal_identifier:If this is observed data (not from a randomized experiment), there might always be missing confounders. Causal effect cannot be identified perfectly.

WARN: Do you want to continue by ignoring any unobserved confounders? (use proceed_when_unidentifiable=True to disable this prompt) [y/n] y

INFO:dowhy.causal_identifier:Instrumental variables for treatment and outcome:[]

INFO:dowhy.do_sampler:Using WeightingSampler for do sampling.

INFO:dowhy.do_sampler:Caution: do samplers assume iid data.

[17]:

do_df.head()

[17]:

| age | educ | black | hisp | married | nodegr | re74 | re75 | re78 | u74 | u75 | treat | propensity_score | weight | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 37 | 9 | 1 | 0 | 0 | 1 | 0.00 | 0.00 | 1067.51 | 1 | 1 | 1 | 0.405319 | 2.467191 |

| 1 | 21 | 9 | 1 | 0 | 0 | 1 | 0.00 | 0.00 | 0.00 | 1 | 1 | 1 | 0.379743 | 2.633358 |

| 2 | 19 | 10 | 1 | 0 | 0 | 1 | 0.00 | 0.00 | 3228.50 | 1 | 1 | 1 | 0.364787 | 2.741328 |

| 3 | 46 | 8 | 1 | 0 | 0 | 1 | 3165.66 | 2594.72 | 0.00 | 0 | 0 | 1 | 0.432318 | 2.313113 |

| 4 | 25 | 13 | 1 | 0 | 0 | 0 | 12362.90 | 3090.73 | 0.00 | 0 | 0 | 1 | 0.526156 | 1.900578 |

This new dataframe gives the distribution of 're78' when 'treat' is set to 1.

For much more detail on how the do method works, check the docstring:

[18]:

help(lalonde.causal.do)

Help on method do in module dowhy.api.causal_data_frame:

do(x, method='weighting', num_cores=1, variable_types={}, outcome=None, params=None, dot_graph=None, common_causes=None, estimand_type='nonparametric-ate', proceed_when_unidentifiable=False, stateful=False) method of dowhy.api.causal_data_frame.CausalAccessor instance

The do-operation implemented with sampling. This will return a pandas.DataFrame with the outcome

variable(s) replaced with samples from P(Y|do(X=x)).

If the value of `x` is left unspecified (e.g. as a string or list), then the original values of `x` are left in

the DataFrame, and Y is sampled from its respective P(Y|do(x)). If the value of `x` is specified (passed with a

`dict`, where variable names are keys, and values are specified) then the new `DataFrame` will contain the

specified values of `x`.

For some methods, the `variable_types` field must be specified. It should be a `dict`, where the keys are

variable names, and values are 'o' for ordered discrete, 'u' for un-ordered discrete, 'd' for discrete, or 'c'

for continuous.

Inference requires a set of control variables. These can be provided explicitly using `common_causes`, which

contains a list of variable names to control for. These can be provided implicitly by specifying a causal graph

with `dot_graph`, from which they will be chosen using the default identification method.

When the set of control variables can't be identified with the provided assumptions, a prompt will raise to the

user asking whether to proceed. To automatically over-ride the prompt, you can set the flag

`proceed_when_unidentifiable` to `True`.

Some methods build components during inference which are expensive. To retain those components for later

inference (e.g. successive calls to `do` with different values of `x`), you can set the `stateful` flag to `True`.

Be cautious about using the `do` operation statefully. State is set on the namespace, rather than the method, so

can behave unpredictably. To reset the namespace and run statelessly again, you can call the `reset` method.

:param x: str, list, dict: The causal state on which to intervene, and (optional) its interventional value(s).

:param method: The inference method to use with the sampler. Currently, `'mcmc'`, `'weighting'`, and

`'kernel_density'` are supported. The `mcmc` sampler requires `pymc3>=3.7`.

:param num_cores: int: if the inference method only supports sampling a point at a time, this will parallelize

sampling.

:param variable_types: dict: The dictionary containing the variable types. Must contain the union of the causal

state, control variables, and the outcome.

:param outcome: str: The outcome variable.

:param params: dict: extra parameters to set as attributes on the sampler object

:param dot_graph: str: A string specifying the causal graph.

:param common_causes: list: A list of strings containing the variable names to control for.

:param estimand_type: str: 'nonparametric-ate' is the only one currently supported. Others may be added later, to allow for specific, parametric estimands.

:param proceed_when_unidentifiable: bool: A flag to over-ride user prompts to proceed when effects aren't

identifiable with the assumptions provided.

:param stateful: bool: Whether to retain state. By default, the do operation is stateless.

:return: pandas.DataFrame: A DataFrame containing the sampled outcome