Getting started with DoWhy: A simple example

This is a quick introduction to the DoWhy causal inference library. We will load a sample dataset and estimate the causal effect of a (pre-specified) treatment variable on a (pre-specified) outcome variable.

First, let us load all required packages.

[1]:

import numpy as np

import pandas as pd

import dowhy

from dowhy import CausalModel

import dowhy.datasets

# Avoid printing DataConversionWarning messages from sklearn

import warnings

from sklearn.exceptions import DataConversionWarning

warnings.filterwarnings(action='ignore', category=DataConversionWarning)

# Config dict to set the logging level

import logging.config

DEFAULT_LOGGING = {
    'version': 1,
    'disable_existing_loggers': False,
    'loggers': {
        '': {
            'level': 'WARN',
        },
    }
}

logging.config.dictConfig(DEFAULT_LOGGING)

Now, let us load a dataset. For simplicity, we simulate a dataset with linear relationships between common causes and treatment, and common causes and outcome.

Beta is the true causal effect.

[2]:

data = dowhy.datasets.linear_dataset(beta=10,
    num_common_causes=5,
    num_instruments=2,
    num_effect_modifiers=1,
    num_samples=20000,
    treatment_is_binary=True,
    num_discrete_common_causes=1)

df = data["df"]

print(df.head())

print(data["dot_graph"])

print("\n")

print(data["gml_graph"])

         X0   Z0        Z1        W0        W1        W2        W3  W4     v0          y
0 -1.030109  0.0  0.779284 -0.394498 -0.385477 -0.367360  0.645206   3   True  13.574042
1 -2.030467  0.0  0.535372 -1.006139 -0.926995 -0.138417 -1.484328   0  False -14.557342
2 -1.586061  0.0  0.913209 -0.759918 -0.338319  1.081894  2.015009   1   True  14.659045
3 -0.252026  0.0  0.240364  0.289508 -1.560852  2.178684  1.255095   2   True  13.701713
4 -0.202098  1.0  0.455254  0.713760 -0.968544  0.454407 -0.410060   0   True   7.247772

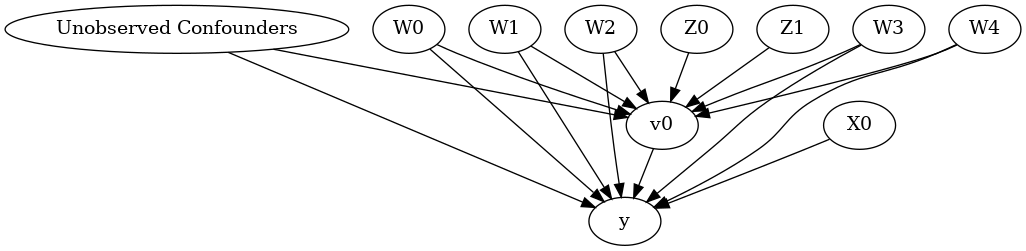

digraph { U[label="Unobserved Confounders"]; U->y;v0->y;U->v0;W0-> v0; W1-> v0; W2-> v0; W3-> v0; W4-> v0;Z0-> v0; Z1-> v0;W0-> y; W1-> y; W2-> y; W3-> y; W4-> y;X0-> y;}

graph[directed 1node[ id "y" label "y"]node[ id "Unobserved Confounders" label "Unobserved Confounders"]edge[source "Unobserved Confounders" target "y"]node[ id "W0" label "W0"] node[ id "W1" label "W1"] node[ id "W2" label "W2"] node[ id "W3" label "W3"] node[ id "W4" label "W4"]node[ id "Z0" label "Z0"] node[ id "Z1" label "Z1"]node[ id "v0" label "v0"]edge[source "Unobserved Confounders" target "v0"]edge[source "v0" target "y"]edge[ source "W0" target "v0"] edge[ source "W1" target "v0"] edge[ source "W2" target "v0"] edge[ source "W3" target "v0"] edge[ source "W4" target "v0"]edge[ source "Z0" target "v0"] edge[ source "Z1" target "v0"]edge[ source "W0" target "y"] edge[ source "W1" target "y"] edge[ source "W2" target "y"] edge[ source "W3" target "y"] edge[ source "W4" target "y"]node[ id "X0" label "X0"] edge[ source "X0" target "y"]]

Note that the data is held in a pandas DataFrame. At present, DoWhy only supports a pandas DataFrame as input.

Interface 1 (recommended): Input causal graph

We now input a causal graph in the GML graph format (recommended). You can also use the DOT format.

To create the causal graph for your dataset, you can use a tool like DAGitty that provides a GUI to construct the graph. You can export the graph string that it generates. The graph string is very close to the DOT format: to convert it, rename dag to digraph, remove the newlines, and add a semicolon after every line. The result can be passed to DoWhy.
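The conversion described above can be sketched as a small string helper. This is only an illustration: `dagitty_to_dot` is a hypothetical name, not part of DoWhy or DAGitty, and it assumes a simple export with one edge statement per line and no node-attribute lines.

```python
def dagitty_to_dot(dagitty_text):
    """Convert a simple 'dag { ... }' string into a one-line DOT 'digraph { ... }' string.

    Assumption: one statement per line, opening line starts with 'dag',
    closing line is a lone '}'.
    """
    lines = [line.strip() for line in dagitty_text.strip().splitlines()]
    lines = [line for line in lines if line]  # drop empty lines
    if not lines or not lines[0].startswith("dag") or lines[-1] != "}":
        raise ValueError("expected text of the form 'dag { ... }'")
    header = "digraph" + lines[0][len("dag"):]  # "dag {" -> "digraph {"
    # Add exactly one semicolon after every statement line.
    statements = [line.rstrip(";") + ";" for line in lines[1:-1]]
    return " ".join([header, *statements, "}"])


example = """dag {
W0 -> v0
W0 -> y
v0 -> y
}"""
dot_string = dagitty_to_dot(example)
print(dot_string)
```

The resulting string could then be supplied through the `graph` argument of `CausalModel`, in the same way as `data["gml_graph"]` in the next cell.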

[3]:

# With graph

model = CausalModel(
    data=df,
    treatment=data["treatment_name"],
    outcome=data["outcome_name"],
    graph=data["gml_graph"]
)

[4]:

model.view_model()

[5]:

from IPython.display import Image, display

display(Image(filename="causal_model.png"))

The above causal graph shows the assumptions encoded in the causal model. We can now use this graph to first identify the causal effect (go from a causal estimand to a probability expression), and then estimate the causal effect.

DoWhy philosophy: Keep identification and estimation separate

Identification can be achieved without access to the data, accessing only the graph. This results in an expression to be computed. This expression can then be evaluated using the available data in the estimation step. It is important to understand that these are orthogonal steps.
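To make the "graph only" point concrete, here is a minimal sketch that reads variable roles off the edge list of the DOT graph printed earlier, without touching the data. It is a deliberate simplification that looks only at direct parents; it is not DoWhy's identification algorithm, and the adjustment set DoWhy reports may differ (in the output below it also includes X0). The helper `parents` and the short label `U` for "Unobserved Confounders" are choices made for this example.

```python
# Edges copied from the DOT graph printed earlier (U = "Unobserved Confounders").
edges = [
    ("U", "y"), ("v0", "y"), ("U", "v0"),
    ("W0", "v0"), ("W1", "v0"), ("W2", "v0"), ("W3", "v0"), ("W4", "v0"),
    ("Z0", "v0"), ("Z1", "v0"),
    ("W0", "y"), ("W1", "y"), ("W2", "y"), ("W3", "y"), ("W4", "y"),
    ("X0", "y"),
]


def parents(node, edges):
    """Return the set of nodes with a direct edge into `node`."""
    return {source for source, target in edges if target == node}


treatment, outcome = "v0", "y"
treatment_parents = parents(treatment, edges)
outcome_parents = parents(outcome, edges) - {treatment}

# Direct causes of both treatment and outcome: candidates for backdoor adjustment.
common_causes = sorted(treatment_parents & outcome_parents)
# Direct causes of the treatment only: instrument candidates in this simple graph.
instrument_candidates = sorted(treatment_parents - outcome_parents)
# Direct causes of the outcome only.
outcome_only = sorted(outcome_parents - treatment_parents)

print("common causes:", common_causes)
print("instrument candidates:", instrument_candidates)
print("outcome-only causes:", outcome_only)
```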

Identification

[6]:

identified_estimand = model.identify_effect(proceed_when_unidentifiable=True)

print(identified_estimand)

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(Expectation(y|W3,W1,W4,W0,W2,X0))
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W1,W4,W0,W2,X0,U) = P(y|v0,W3,W1,W4,W0,W2,X0)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z1, Z0])*Derivative([v0], [Z1, Z0])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

### Estimand : 3

Estimand name: frontdoor

No such variable found!

Note the parameter flag proceed_when_unidentifiable. It needs to be set to True to convey the assumption that we are ignoring any unobserved confounding. The default behavior is to prompt the user to double-check that the unobserved confounders can be ignored.
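The iv estimand above is a ratio: the change in the outcome induced by the instruments divided by the change in the treatment induced by the instruments. For intuition, the sketch below simulates a much simpler setting than the notebook's dataset (one binary instrument `z`, one unobserved confounder `u`, a constant effect `beta`; all of these are assumptions of this example) and computes the standard Wald ratio. It does not call DoWhy.

```python
import random

rng = random.Random(0)
beta = 10.0  # true causal effect in this toy simulation
n = 50_000

rows = []
for _ in range(n):
    z = rng.random() < 0.5                      # binary instrument
    u = rng.gauss(0, 1)                         # unobserved confounder
    t = (2.0 * z + u + rng.gauss(0, 1)) > 1.0   # treatment depends on z and u
    y = beta * t + 3.0 * u + rng.gauss(0, 1)    # outcome depends on t and u, not on z
    rows.append((z, t, y))


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def diff_by(rows, group_index, value_index):
    """Mean of one column where the grouping column is True, minus the mean where it is False."""
    on = mean(r[value_index] for r in rows if r[group_index])
    off = mean(r[value_index] for r in rows if not r[group_index])
    return on - off


# Naive comparison of treated vs. untreated units is biased by u.
naive = diff_by(rows, 1, 2)
# Wald ratio: effect of z on y divided by effect of z on t.
wald = diff_by(rows, 0, 2) / diff_by(rows, 0, 1)

print("naive difference in means:", naive)
print("Wald (IV) estimate:", wald)
```

In this simulation the naive difference overstates the effect because `u` raises both treatment and outcome, while the ratio recovers a value near `beta`; this relies on the as-if-random and exclusion assumptions listed in the output above.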

Estimation

[7]:

causal_estimate = model.estimate_effect(identified_estimand,
    method_name="backdoor.propensity_score_stratification")

print(causal_estimate)

print("Causal Estimate is " + str(causal_estimate.value))

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(Expectation(y|W3,W1,W4,W0,W2,X0))
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W1,W4,W0,W2,X0,U) = P(y|v0,W3,W1,W4,W0,W2,X0)

## Realized estimand

b: y~v0+W3+W1+W4+W0+W2+X0

Target units: ate

## Estimate

Mean value: 9.566139500556192

Causal Estimate is 9.566139500556192
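For intuition about what propensity score stratification does, here is a minimal sketch on a toy simulation with a single discrete confounder `w`. The propensity score is estimated as the treated fraction at each level of `w`, units are grouped by that score, and the within-stratum differences are averaged with weights proportional to stratum size. This is an illustration under those assumptions only; DoWhy's estimator fits a propensity model on all common causes and builds its own strata, and its internals are not reproduced here.

```python
import random
from collections import defaultdict

rng = random.Random(0)
beta = 10.0  # true causal effect in this toy simulation
n = 20_000
p_treat = {0: 0.2, 1: 0.4, 2: 0.6, 3: 0.8}  # treatment probability per level of w

rows = []
for _ in range(n):
    w = rng.randrange(4)                      # discrete common cause
    t = rng.random() < p_treat[w]             # treatment depends on w
    y = beta * t + 2.0 * w + rng.gauss(0, 1)  # outcome depends on t and w
    rows.append((w, t, y))


def mean(values):
    values = list(values)
    return sum(values) / len(values)


# Naive comparison, confounded by w.
naive = mean(y for _, t, y in rows if t) - mean(y for _, t, y in rows if not t)

# Step 1: estimate the propensity score as the treated fraction per level of w.
treated_flags = defaultdict(list)
for w, t, _ in rows:
    treated_flags[w].append(t)
propensity = {w: mean(flags) for w, flags in treated_flags.items()}

# Step 2: group units by their estimated propensity score.
strata = defaultdict(list)
for w, t, y in rows:
    strata[propensity[w]].append((t, y))

# Step 3: size-weighted average of within-stratum treated-minus-control differences.
stratified = 0.0
for members in strata.values():
    treated = [y for t, y in members if t]
    control = [y for t, y in members if not t]
    stratified += (len(members) / n) * (mean(treated) - mean(control))

print("naive difference in means:", naive)
print("stratified estimate:", stratified)
```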

You can pass additional parameters to the estimate_effect method. For instance, to estimate the effect on any subset of the units, you can specify the “target_units” parameter, which can be a string (“ate”, “att”, or “atc”), a lambda function that filters rows of the data frame, or a new dataframe on which to compute the effect. You can also specify “effect modifiers” to estimate heterogeneous effects across these variables. See help(CausalModel.estimate_effect).

[8]:

# Causal effect on the control group (ATC)

causal_estimate_atc = model.estimate_effect(identified_estimand,
    method_name="backdoor.propensity_score_stratification",
    target_units="atc")

print(causal_estimate_atc)

print("Causal Estimate is " + str(causal_estimate_atc.value))

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(Expectation(y|W3,W1,W4,W0,W2,X0))
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W1,W4,W0,W2,X0,U) = P(y|v0,W3,W1,W4,W0,W2,X0)

## Realized estimand

b: y~v0+W3+W1+W4+W0+W2+X0

Target units: atc

## Estimate

Mean value: 9.573808685737262

Causal Estimate is 9.573808685737262
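The three string values of target_units differ only in which units the effect is averaged over. The toy example below uses made-up unit-level effects (which are never observed directly in real data) purely to show the three averages; it does not call DoWhy.

```python
from statistics import mean

# Hypothetical units with a known individual effect, for illustration only.
units = [
    {"treated": True, "effect": 12.0},
    {"treated": True, "effect": 10.0},
    {"treated": True, "effect": 11.0},
    {"treated": False, "effect": 8.0},
    {"treated": False, "effect": 9.0},
]

ate = mean(u["effect"] for u in units)                      # all units
att = mean(u["effect"] for u in units if u["treated"])      # treated units only
atc = mean(u["effect"] for u in units if not u["treated"])  # control units only

print("ATE:", ate)  # 10.0
print("ATT:", att)  # 11.0
print("ATC:", atc)  # 8.5
```

When the effect does not vary much between treated and control units, as in the simulated dataset above, the three quantities are close to each other.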

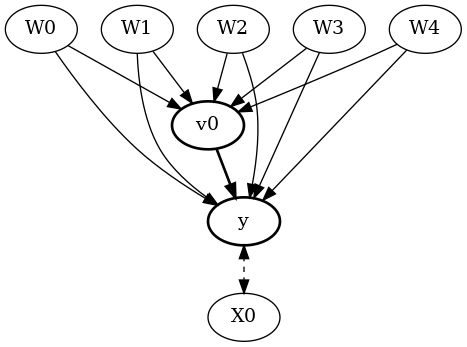

Interface 2: Specify common causes and instruments

[9]:

# Without graph

model = CausalModel(
    data=df,
    treatment=data["treatment_name"],
    outcome=data["outcome_name"],
    common_causes=data["common_causes_names"],
    effect_modifiers=data["effect_modifier_names"])

[10]:

model.view_model()

[11]:

from IPython.display import Image, display

display(Image(filename="causal_model.png"))

We get the same causal graph. Identification and estimation are now done as before.

[12]:

identified_estimand = model.identify_effect(proceed_when_unidentifiable=True)

Estimation

[13]:

estimate = model.estimate_effect(identified_estimand,
    method_name="backdoor.propensity_score_stratification")

print(estimate)

print("Causal Estimate is " + str(estimate.value))

*** Causal Estimate ***

## Identified estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

  d
─────(Expectation(y|W0,W3,W1,W2,W4))
d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W0,W3,W1,W2,W4,U) = P(y|v0,W0,W3,W1,W2,W4)

## Realized estimand

b: y~v0+W0+W3+W1+W2+W4

Target units: ate

## Estimate

Mean value: 9.60353896239299

Causal Estimate is 9.60353896239299

Refuting the estimate

Let us now look at ways of refuting the estimate obtained.

Adding a random common cause variable

[14]:

res_random=model.refute_estimate(identified_estimand, estimate, method_name="random_common_cause")

print(res_random)

Refute: Add a Random Common Cause

Estimated effect:9.60353896239299

New effect:9.602995259163794

Adding an unobserved common cause variable

[15]:

res_unobserved = model.refute_estimate(identified_estimand, estimate,
    method_name="add_unobserved_common_cause",
    confounders_effect_on_treatment="binary_flip", confounders_effect_on_outcome="linear",
    effect_strength_on_treatment=0.01, effect_strength_on_outcome=0.02)

print(res_unobserved)

Refute: Add an Unobserved Common Cause

Estimated effect:9.60353896239299

New effect:7.591550789699005

Replacing treatment with a random (placebo) variable

[16]:

res_placebo = model.refute_estimate(identified_estimand, estimate,
    method_name="placebo_treatment_refuter", placebo_type="permute")

print(res_placebo)

Refute: Use a Placebo Treatment

Estimated effect:9.60353896239299

New effect:0.005166426876598622

p value:0.47
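The idea behind the permutation placebo can be sketched on a toy simulation: shuffle the treatment column so that it carries no information about the outcome, rerun the same estimator, and check that the estimate collapses toward zero. The data-generating process and the helper `stratified_effect` are assumptions of this example; DoWhy's refuter additionally repeats the procedure to compute the p value shown above, which is not reproduced here.

```python
import random
from collections import defaultdict


def stratified_effect(rows):
    """Size-weighted average of treated-minus-control differences within levels of w."""
    strata = defaultdict(list)
    for w, t, y in rows:
        strata[w].append((t, y))
    total = 0.0
    for members in strata.values():
        treated = [y for t, y in members if t]
        control = [y for t, y in members if not t]
        diff = sum(treated) / len(treated) - sum(control) / len(control)
        total += len(members) / len(rows) * diff
    return total


rng = random.Random(0)
beta = 10.0  # true causal effect in this toy simulation
p_treat = {0: 0.2, 1: 0.4, 2: 0.6, 3: 0.8}

rows = []
for _ in range(20_000):
    w = rng.randrange(4)
    t = rng.random() < p_treat[w]
    y = beta * t + 2.0 * w + rng.gauss(0, 1)
    rows.append((w, t, y))

original_effect = stratified_effect(rows)

# Placebo: permute the treatment column, keeping w and y in place.
permuted = [t for _, t, _ in rows]
rng.shuffle(permuted)
placebo_rows = [(w, t_new, y) for (w, _, y), t_new in zip(rows, permuted)]
placebo_effect = stratified_effect(placebo_rows)

print("original estimate:", original_effect)
print("placebo estimate:", placebo_effect)
```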

Removing a random subset of the data

[17]:

res_subset = model.refute_estimate(identified_estimand, estimate,
    method_name="data_subset_refuter", subset_fraction=0.9)

print(res_subset)

Refute: Use a subset of data

Estimated effect:9.60353896239299

New effect:9.6152898407909

p value:0.42

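As you can see, the propensity score stratification estimate is stable under the random common cause and data subset refuters, which leave it near 9.60, and the placebo treatment yields an effect close to zero, as expected. The simulated unobserved common cause moves the estimate from about 9.60 to 7.59, which shows how sensitive it is to confounding of the assumed strength. For reproducibility, you can add a parameter “random_seed” to any refutation method, as shown below.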

[18]:

res_subset = model.refute_estimate(identified_estimand, estimate,
    method_name="data_subset_refuter", subset_fraction=0.9, random_seed=1)

print(res_subset)

Refute: Use a subset of data

Estimated effect:9.60353896239299

New effect:9.618271557173077

p value:0.33
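Why a seed makes a subset-based check repeatable can be seen in a few lines of plain Python. The helper `subset_indices` is hypothetical and only mimics the idea of drawing a random fraction of the rows; it is not how DoWhy implements its refuter.

```python
import random


def subset_indices(n_rows, subset_fraction, random_seed=None):
    """Pick a random subset of row indices; a fixed seed gives the same subset every time."""
    rng = random.Random(random_seed)
    k = int(n_rows * subset_fraction)
    return sorted(rng.sample(range(n_rows), k))


first = subset_indices(1000, 0.9, random_seed=1)
second = subset_indices(1000, 0.9, random_seed=1)

print(len(first))       # 900 rows kept
print(first == second)  # True: same seed, same subset
```

With the same seed, the same rows are selected on every run, so any estimate computed on that subset is repeated exactly.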