Conditional Average Treatment Effects (CATE) with DoWhy and EconML

This is an experimental feature that calls EconML methods from within DoWhy. EconML provides several different estimators for conditional average treatment effects (CATE).

All four steps of causal inference in DoWhy remain the same: model, identify, estimate, and refute. The key difference is that we now call EconML methods in the estimation step. We also include a simpler example using linear regression to build intuition for CATE estimators.

[1]:

import os, sys

sys.path.insert(1, os.path.abspath("../../../")) # for dowhy source code

[2]:

import numpy as np

import pandas as pd

import logging

import dowhy

from dowhy import CausalModel

import dowhy.datasets

import econml

import warnings

warnings.filterwarnings('ignore')

[3]:

data = dowhy.datasets.linear_dataset(10, num_common_causes=4, num_samples=10000,

num_instruments=2, num_effect_modifiers=2,

num_treatments=1,

treatment_is_binary=False,

num_discrete_common_causes=2,

num_discrete_effect_modifiers=0,

one_hot_encode=False)

df=data['df']

df.head()

[3]:

| | X0 | X1 | Z0 | Z1 | W0 | W1 | W2 | W3 | v0 | y |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.279018 | -0.955066 | 0.0 | 0.228005 | 2.128748 | -0.064190 | 2 | 1 | 14.761720 | 122.169174 |
| 1 | -0.284234 | 1.382251 | 1.0 | 0.396490 | 0.175366 | -0.115139 | 1 | 2 | 23.566119 | 345.906350 |
| 2 | 1.411501 | 2.756870 | 0.0 | 0.765155 | 0.649999 | 0.861213 | 2 | 3 | 24.094062 | 477.367085 |
| 3 | -0.149004 | 1.887996 | 0.0 | 0.484696 | 0.693805 | 0.009433 | 0 | 0 | 7.781959 | 124.409057 |
| 4 | -1.033017 | 1.886337 | 0.0 | 0.989248 | -1.287000 | -0.845054 | 3 | 2 | 21.967755 | 356.001269 |

[4]:

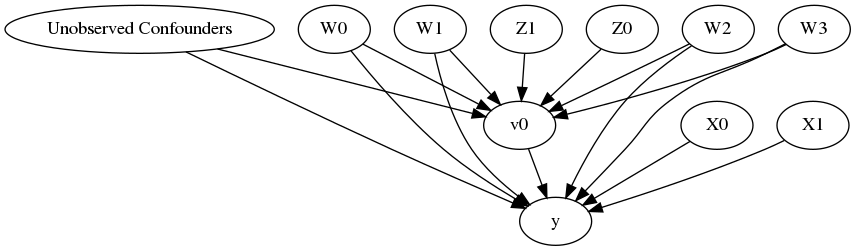

model = CausalModel(data=data["df"],

treatment=data["treatment_name"], outcome=data["outcome_name"],

graph=data["gml_graph"])

INFO:dowhy.causal_model:Model to find the causal effect of treatment ['v0'] on outcome ['y']

[5]:

model.view_model()

from IPython.display import Image, display

display(Image(filename="causal_model.png"))

[6]:

identified_estimand= model.identify_effect()

print(identified_estimand)

INFO:dowhy.causal_identifier:Common causes of treatment and outcome:['W2', 'Unobserved Confounders', 'W1', 'W0', 'W3']

WARNING:dowhy.causal_identifier:If this is observed data (not from a randomized experiment), there might always be missing confounders. Causal effect cannot be identified perfectly.

WARN: Do you want to continue by ignoring any unobserved confounders? (use proceed_when_unidentifiable=True to disable this prompt) [y/n] y

INFO:dowhy.causal_identifier:Instrumental variables for treatment and outcome:['Z1', 'Z0']

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W2,W1,W0,W3))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W2,W1,W0,W3,U) = P(y|v0,W2,W1,W0,W3)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z1, Z0])*Derivative([v0], [Z1, Z0])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

Linear Model

First, let us build some intuition using a linear model for estimating CATE. The effect modifiers (that lead to a heterogeneous treatment effect) can be modeled as interaction terms with the treatment. Thus, their value modulates the effect of treatment.

Below is the estimated effect of changing the treatment from 0 to 1.

[7]:

linear_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.linear_regression",

control_value=0,

treatment_value=1)

print(linear_estimate)

INFO:dowhy.causal_estimator:INFO: Using Linear Regression Estimator

INFO:dowhy.causal_estimator:b: y~v0+W2+W1+W0+W3+v0*X0+v0*X1

*** Causal Estimate ***

## Target estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W2,W1,W0,W3))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W2,W1,W0,W3,U) = P(y|v0,W2,W1,W0,W3)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z1, Z0])*Derivative([v0], [Z1, Z0])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

## Realized estimand

b: y~v0+W2+W1+W0+W3+v0*X0+v0*X1

## Estimate

Value: 9.999999999999979
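The interaction-term intuition from the linear model can be sketched with plain NumPy. This is a minimal illustration on synthetic data, not DoWhy's implementation: the variable names, coefficients, and the helper `cate` are all hypothetical. The effect of the treatment is the coefficient on the treatment plus the interaction coefficient times the effect modifier's value.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000
x = rng.normal(size=n)             # effect modifier (hypothetical)
w = rng.normal(size=n)             # common cause (hypothetical)
t = 0.5 * w + rng.normal(size=n)   # continuous treatment, confounded by w
# By construction, the true effect of t on y is 2 + 3 * x.
y = (2.0 + 3.0 * x) * t + 1.5 * w + rng.normal(scale=0.1, size=n)

# Regress y on: intercept, treatment, common cause, treatment * effect modifier.
design = np.column_stack([np.ones(n), t, w, t * x])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)
beta_t, beta_tx = coef[1], coef[3]

def cate(x_value, control=0.0, treated=1.0):
    """Effect of moving the treatment from control to treated at a given x."""
    return (beta_t + beta_tx * x_value) * (treated - control)

print("effect at x=0:", cate(0.0))
print("effect at x=1:", cate(1.0))
```

Averaging `cate(x)` over a chosen set of units gives the conditional effect for that group.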

EconML methods

We now move to the more advanced methods from the EconML package for estimating CATE.

First, let us look at the double machine learning estimator. method_name corresponds to the fully qualified name of the class that we want to use. For double ML, the class is "econml.dml.DMLCateEstimator", and it is passed with the identification prefix as "backdoor.econml.dml.DMLCateEstimator".

target_units defines the units over which the causal estimate is computed. It can be a lambda function that filters the original dataframe, a new Pandas dataframe, or a string naming one of the three main kinds of target units ("ate", "att", and "atc"). Below we show an example of a lambda function.
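To make the lambda-filter form concrete, here is a small pandas-only sketch of what such a filter selects. The toy dataframe and its `cate` column are hypothetical, and how DoWhy applies the filter internally is not shown here; the sketch only illustrates the boolean-mask semantics of a function like `lambda df: df["X0"] > 1`.

```python
import pandas as pd

# Hypothetical per-unit effects, just to illustrate the filter.
toy = pd.DataFrame({
    "X0": [-0.5, 1.2, 2.0, 0.3],
    "cate": [8.0, 14.0, 16.0, 10.0],
})

select = lambda df: df["X0"] > 1   # same shape as the target_units lambda

mask = select(toy)                 # boolean Series, one entry per row
subgroup = toy[mask]               # rows where X0 > 1
subgroup_effect = subgroup["cate"].mean()

print(mask.tolist())
print(subgroup_effect)
```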

method_params is passed directly to EconML. For details on allowed parameters, refer to the EconML documentation.

[8]:

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LassoCV

from sklearn.ensemble import GradientBoostingRegressor

dml_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.dml.DMLCateEstimator",

control_value = 0,

treatment_value = 1,

target_units = lambda df: df["X0"]>1, # condition used for CATE

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}})

print(dml_estimate)

INFO:dowhy.causal_estimator:INFO: Using EconML Estimator

INFO:dowhy.causal_estimator:b: y~v0+W2+W1+W0+W3

*** Causal Estimate ***

## Target estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W2,W1,W0,W3))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W2,W1,W0,W3,U) = P(y|v0,W2,W1,W0,W3)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z1, Z0])*Derivative([v0], [Z1, Z0])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

## Realized estimand

b: y~v0+W2+W1+W0+W3

## Estimate

Value: 13.199275668451099

[9]:

print("True causal estimate is", data["ate"])

True causal estimate is 12.855552298854034

[10]:

dml_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.dml.DMLCateEstimator",

control_value = 0,

treatment_value = 1,

target_units = 1, # condition used for CATE

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}})

print(dml_estimate)

INFO:dowhy.causal_estimator:INFO: Using EconML Estimator

INFO:dowhy.causal_estimator:b: y~v0+W2+W1+W0+W3

*** Causal Estimate ***

## Target estimand

Estimand type: nonparametric-ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

─────(Expectation(y|W2,W1,W0,W3))

d[v₀]

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W2,W1,W0,W3,U) = P(y|v0,W2,W1,W0,W3)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, [Z1, Z0])*Derivative([v0], [Z1, Z0])**(-1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

## Realized estimand

b: y~v0+W2+W1+W0+W3

## Estimate

Value: 12.785653600020371
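For intuition about what a double machine learning estimator does, the following is a rough sketch of the residual-on-residual idea using scikit-learn only. It is not EconML's implementation: the synthetic data, the variable names, and the choice of a plain linear final stage are assumptions made for illustration. Nuisance models predict the outcome and the treatment from the controls with cross-fitting, and the final stage regresses outcome residuals on treatment residuals interacted with the effect modifier.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_predict

rng = np.random.default_rng(0)
n = 2000
w = rng.normal(size=(n, 2))                       # common causes (hypothetical)
x = rng.normal(size=n)                            # effect modifier (hypothetical)
t = w[:, 0] + 0.5 * w[:, 1] + rng.normal(size=n)  # continuous treatment
# By construction, the true effect of t on y is 10 + 2 * x.
y = (10.0 + 2.0 * x) * t + 3.0 * w[:, 0] + rng.normal(size=n)

controls = np.column_stack([w, x])

# Stage 1: cross-fitted nuisance predictions E[y | W, X] and E[t | W, X].
y_hat = cross_val_predict(GradientBoostingRegressor(random_state=0), controls, y, cv=3)
t_hat = cross_val_predict(GradientBoostingRegressor(random_state=0), controls, t, cv=3)
y_res = y - y_hat
t_res = t - t_hat

# Stage 2: regress outcome residuals on treatment residuals times [1, x].
features = np.column_stack([t_res, t_res * x])
final = LinearRegression(fit_intercept=False).fit(features, y_res)
theta0, theta1 = final.coef_

print("estimated effect at x=0:", theta0)
print("estimated change in effect per unit of x:", theta1)
```

The estimates are approximate because the nuisance models are themselves estimated; more data or better-tuned nuisance models would typically tighten them.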

CATE Object and Confidence Intervals

[ ]:

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LassoCV

from sklearn.ensemble import GradientBoostingRegressor

dml_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.econml.dml.DMLCateEstimator",

target_units = lambda df: df["X0"]>1,

confidence_intervals=True,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{

'inference': 'bootstrap',

}

})

print(dml_estimate)

print(dml_estimate.cate_estimates[:10])

print(dml_estimate.effect_intervals)
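The cell above requests bootstrap inference. As a generic illustration of the idea (not EconML's implementation), a percentile bootstrap refits the estimator on resampled data and reads the interval from the distribution of refitted estimates. The data and the `slope` helper below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
t = rng.normal(size=n)
y = 3.0 * t + rng.normal(size=n)   # true effect is 3 by construction

def slope(t_sample, y_sample):
    """OLS slope of y on t, used here as a stand-in effect estimator."""
    return np.cov(t_sample, y_sample)[0, 1] / np.var(t_sample, ddof=1)

point_estimate = slope(t, y)

# Refit on 200 resamples drawn with replacement.
boot = []
for _ in range(200):
    idx = rng.integers(0, n, size=n)
    boot.append(slope(t[idx], y[idx]))

lower, upper = np.percentile(boot, [2.5, 97.5])
print("estimate:", point_estimate)
print("95% percentile interval:", (lower, upper))
```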

You can also provide new inputs as target units and estimate CATE on them.

[ ]:

test_cols= data['effect_modifier_names'] # only need effect modifiers' values

test_arr = [np.random.uniform(0,1, 10) for _ in range(len(test_cols))] # all variables are sampled uniformly, sample of 10

test_df = pd.DataFrame(np.array(test_arr).transpose(), columns=test_cols)

dml_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.econml.dml.DMLCateEstimator",

target_units = test_df,

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}

})

print(dml_estimate.cate_estimates)

You can also retrieve the raw EconML estimator object for any further operations.

[ ]:

print(dml_estimate._estimator_object)

dml_estimate

Works with any EconML method

In addition to double machine learning, below we show example analyses using orthogonal forests, DRLearner (bug to fix), and neural network-based instrumental variables.

Continuous treatment, Continuous outcome

[ ]:

from sklearn.linear_model import LogisticRegression

orthoforest_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.ortho_forest.ContinuousTreatmentOrthoForest",

target_units = lambda df: df["X0"]>1,

confidence_intervals=False,

method_params={"init_params":{

'n_trees':2, # not ideal, just as an example to speed up computation

},

"fit_params":{}

})

print(orthoforest_estimate)

Binary treatment, Binary outcome

[ ]:

data_binary = dowhy.datasets.linear_dataset(10, num_common_causes=4, num_samples=10000,

num_instruments=2, num_effect_modifiers=2,

treatment_is_binary=True, outcome_is_binary=True)

# convert boolean values to {0,1} numeric

data_binary['df'].v0 = data_binary['df'].v0.astype(int)

data_binary['df'].y = data_binary['df'].y.astype(int)

print(data_binary['df'])

model_binary = CausalModel(data=data_binary["df"],

treatment=data_binary["treatment_name"], outcome=data_binary["outcome_name"],

graph=data_binary["gml_graph"])

identified_estimand_binary = model_binary.identify_effect(proceed_when_unidentifiable=True)

Using DRLearner estimator

[ ]:

from sklearn.linear_model import LogisticRegressionCV

#todo needs binary y

drlearner_estimate = model_binary.estimate_effect(identified_estimand_binary,

method_name="backdoor.econml.drlearner.LinearDRLearner",

target_units = lambda df: df["X0"]>1,

confidence_intervals=False,

method_params={"init_params":{

'model_propensity': LogisticRegressionCV(cv=3, solver='lbfgs', multi_class='auto')

},

"fit_params":{}

})

print(drlearner_estimate)

Instrumental Variable Method

[ ]:

import keras

from econml.deepiv import DeepIVEstimator

dims_zx = len(model._instruments)+len(model._effect_modifiers)

dims_tx = len(model._treatment)+len(model._effect_modifiers)

treatment_model = keras.Sequential([keras.layers.Dense(128, activation='relu', input_shape=(dims_zx,)), # sum of dims of Z and X

keras.layers.Dropout(0.17),

keras.layers.Dense(64, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(32, activation='relu'),

keras.layers.Dropout(0.17)])

response_model = keras.Sequential([keras.layers.Dense(128, activation='relu', input_shape=(dims_tx,)), # sum of dims of T and X

keras.layers.Dropout(0.17),

keras.layers.Dense(64, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(32, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(1)])

deepiv_estimate = model.estimate_effect(identified_estimand,

method_name="iv.econml.deepiv.DeepIVEstimator",

target_units = lambda df: df["X0"]>-1,

confidence_intervals=False,

method_params={"init_params":{'n_components': 10, # Number of gaussians in the mixture density networks

'm': lambda z, x: treatment_model(keras.layers.concatenate([z, x])), # Treatment model,

"h": lambda t, x: response_model(keras.layers.concatenate([t, x])), # Response model

'n_samples': 1, # Number of samples used to estimate the response

'first_stage_options': {'epochs':25},

'second_stage_options': {'epochs':25}

},

"fit_params":{}})

print(deepiv_estimate)
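The iv estimand printed earlier is a ratio: the effect of the instruments on the outcome divided by their effect on the treatment. A linear version of that ratio can be sketched in a few lines of NumPy. This is a deliberately simpler stand-in, not DeepIV, which roughly speaking replaces the two linear stages with neural networks; the synthetic data and names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20000
z = rng.normal(size=n)                   # instrument
u = rng.normal(size=n)                   # unobserved confounder
t = 1.5 * z + u + rng.normal(size=n)     # treatment, affected by z and u
y = 4.0 * t + 3.0 * u + rng.normal(size=n)  # true effect of t is 4

# Naive regression of y on t is biased because u drives both t and y.
naive = np.cov(t, y)[0, 1] / np.var(t, ddof=1)

# IV ratio: (effect of z on y) / (effect of z on t).
iv = np.cov(z, y)[0, 1] / np.cov(z, t)[0, 1]

print("naive estimate:", naive)
print("IV estimate:", iv)
```

The IV estimate recovers the true effect only under the as-if-random and exclusion assumptions listed with the estimand.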

Metalearners

[ ]:

data_experiment = dowhy.datasets.linear_dataset(10, num_common_causes=0, num_samples=10000,

num_instruments=2, num_effect_modifiers=4,

treatment_is_binary=True, outcome_is_binary=True)

# convert boolean values to {0,1} numeric

data_experiment['df'].v0 = data_experiment['df'].v0.astype(int)

data_experiment['df'].y = data_experiment['df'].y.astype(int)

print(data_experiment['df'])

model_experiment = CausalModel(data=data_experiment["df"],

treatment=data_experiment["treatment_name"], outcome=data_experiment["outcome_name"],

graph=data_experiment["gml_graph"])

identified_estimand_experiment = model_experiment.identify_effect(proceed_when_unidentifiable=True)

[ ]:

from sklearn.linear_model import LogisticRegressionCV

metalearner_estimate = model_experiment.estimate_effect(identified_estimand_experiment,

method_name="backdoor.econml.metalearners.TLearner",

target_units = lambda df: df["X0"]>1,

confidence_intervals=False,

method_params={"init_params":{

'models': LogisticRegressionCV(cv=3, solver='lbfgs', multi_class='auto')

},

"fit_params":{}

})

print(metalearner_estimate)
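The T-learner idea can be sketched directly with scikit-learn: fit one outcome model on treated units, another on control units, and take the difference of their predictions. The sketch below simplifies the setting by using a continuous outcome with linear regression rather than the binary outcome above, and all data and names are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
n = 4000
x = rng.normal(size=(n, 2))        # effect modifiers (hypothetical)
t = rng.integers(0, 2, size=n)     # randomized binary treatment
tau = 1.0 + 2.0 * x[:, 0]          # true per-unit effect, by construction
y = x[:, 1] + tau * t + rng.normal(scale=0.5, size=n)

# One outcome model per treatment arm.
model_control = LinearRegression().fit(x[t == 0], y[t == 0])
model_treated = LinearRegression().fit(x[t == 1], y[t == 1])

# CATE estimate: difference between the two arms' predictions.
cate_hat = model_treated.predict(x) - model_control.predict(x)

subgroup = x[:, 0] > 1             # same kind of filter as target_units
print("average effect:", cate_hat.mean())
print("effect for X0 > 1:", cate_hat[subgroup].mean())
```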

Refuting the estimate

Adding a random common cause variable

[ ]:

res_random=model.refute_estimate(identified_estimand, dml_estimate, method_name="random_common_cause")

print(res_random)

Adding an unobserved common cause variable

[ ]:

res_unobserved=model.refute_estimate(identified_estimand, dml_estimate, method_name="add_unobserved_common_cause",

confounders_effect_on_treatment="linear", confounders_effect_on_outcome="linear",

effect_strength_on_treatment=0.01, effect_strength_on_outcome=0.02)

print(res_unobserved)

Replacing treatment with a random (placebo) variable

[ ]:

res_placebo=model.refute_estimate(identified_estimand, dml_estimate,

method_name="placebo_treatment_refuter", placebo_type="permute")

print(res_placebo)
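The logic of a permutation placebo test can be illustrated without DoWhy. In this sketch, which uses hypothetical synthetic data and a hypothetical `estimate_effect` helper, the treatment column is shuffled so that it can no longer carry any causal signal; a sound estimator should then report an effect close to zero.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000
w = rng.normal(size=n)                   # observed common cause
t = 0.8 * w + rng.normal(size=n)         # treatment
y = 5.0 * t + 2.0 * w + rng.normal(size=n)  # true effect of t is 5

def estimate_effect(treatment, outcome, confounder):
    """Coefficient on the treatment from a regression that adjusts for the confounder."""
    design = np.column_stack([np.ones(len(treatment)), treatment, confounder])
    coef, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coef[1]

original = estimate_effect(t, y, w)

# Placebo: permute the treatment, re-estimate, and repeat a few times.
placebo_effects = [estimate_effect(rng.permutation(t), y, w) for _ in range(50)]
placebo_mean = float(np.mean(placebo_effects))

print("original estimate:", original)
print("mean placebo estimate:", placebo_mean)
```

A placebo estimate far from zero would suggest that the estimator is picking up something other than the treatment's effect.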

Removing a random subset of the data

[ ]:

res_subset=model.refute_estimate(identified_estimand, dml_estimate,

method_name="data_subset_refuter", subset_fraction=0.8)

print(res_subset)

More refutation methods to come, especially specific to the CATE estimators.