Getting started with DoWhy: A simple example

This is a quick introduction to the DoWhy causal inference library. We will load a sample dataset and estimate the causal effect of a (pre-specified) treatment variable on a (pre-specified) outcome variable.

First, let us add the required path for Python to find the DoWhy code and load all required packages.

[1]:

import os, sys

sys.path.append(os.path.abspath("../../"))

Let’s check the Python version.

[2]:

print(sys.version)

3.7.4 (default, Jul 9 2019, 03:52:42)

[GCC 5.4.0 20160609]

[3]:

import numpy as np

import pandas as pd

import dowhy

from dowhy.do_why import CausalModel

import dowhy.datasets

Now, let us load a dataset. For simplicity, we simulate a dataset with linear relationships between common causes and treatment, and common causes and outcome.

The parameter beta is the true causal effect; here it is set to 10.

[4]:

data = dowhy.datasets.linear_dataset(beta=10,
        num_common_causes=5,
        num_instruments=2,
        num_samples=10000,
        treatment_is_binary=True)

df = data["df"]

print(df.head())

print(data["dot_graph"])

print("\n")

print(data["gml_graph"])

    Z0        Z1        X0        X1        X2        X3        X4    v          y
0  0.0  0.053555 -1.174258 -0.987573  0.395070 -0.160051  0.264335  0.0  -4.636023
1  0.0  0.832194  0.631449  0.642332  1.227220 -0.364481  1.425717  1.0  23.078269
2  0.0  0.306234 -0.114428  0.201991  0.023685  0.953880  1.076977  1.0  18.155708
3  0.0  0.416143  0.427830  1.266550  0.755916  1.557608  0.444620  1.0  23.380629
4  0.0  0.652794  0.192758  0.532143 -0.683274 -0.571159  0.977457  1.0  12.891050

digraph { v ->y; U[label="Unobserved Confounders"]; U->v; U->y;X0-> v; X1-> v; X2-> v; X3-> v; X4-> v;X0-> y; X1-> y; X2-> y; X3-> y; X4-> y;Z0-> v; Z1-> v;}

graph[directed 1node[ id "v" label "v"]node[ id "y" label "y"]node[ id "Unobserved Confounders" label "Unobserved Confounders"]edge[source "v" target "y"]edge[source "Unobserved Confounders" target "v"]edge[source "Unobserved Confounders" target "y"]node[ id "X0" label "X0"] edge[ source "X0" target "v"] node[ id "X1" label "X1"] edge[ source "X1" target "v"] node[ id "X2" label "X2"] edge[ source "X2" target "v"] node[ id "X3" label "X3"] edge[ source "X3" target "v"] node[ id "X4" label "X4"] edge[ source "X4" target "v"]edge[ source "X0" target "y"] edge[ source "X1" target "y"] edge[ source "X2" target "y"] edge[ source "X3" target "y"] edge[ source "X4" target "y"]node[ id "Z0" label "Z0"] edge[ source "Z0" target "v"] node[ id "Z1" label "Z1"] edge[ source "Z1" target "v"]]

Note that the data is held in a pandas DataFrame. At present, DoWhy supports only pandas DataFrames as input.
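To make the data-generating process concrete, here is a minimal NumPy sketch of a linear simulation of this kind. It is not the implementation of dowhy.datasets.linear_dataset: the seed, the coefficient vectors (c_treat, c_out, c_inst) and the thresholding rule for the binary treatment are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # arbitrary seed, for reproducibility only
n, beta = 10_000, 10.0          # beta is the true causal effect

# Five observed common causes (X0..X4) and two instruments (Z0, Z1).
X = rng.normal(size=(n, 5))
Z = rng.normal(size=(n, 2))

# Hypothetical coefficients; the real generator draws its own.
c_treat = np.array([0.5, -0.3, 0.8, 0.2, 0.4])  # common causes -> treatment
c_out = np.array([1.0, 2.0, 1.5, 0.5, 3.0])     # common causes -> outcome
c_inst = np.array([1.0, 0.7])                   # instruments -> treatment

# Binary treatment: threshold a noisy linear score.
score = X @ c_treat + Z @ c_inst + rng.normal(size=n)
v = (score > 0).astype(float)

# The outcome depends linearly on treatment and common causes,
# and never on the instruments directly.
y = beta * v + X @ c_out + rng.normal(scale=0.1, size=n)

# The raw difference in means mixes the causal effect with confounding.
naive = y[v == 1].mean() - y[v == 0].mean()
print("true effect:", beta, "naive difference in means:", naive)
```

Because the common causes push treatment and outcome in the same direction here, the naive difference overstates beta, which is why the adjustment steps that follow are needed.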

Interface 1 (recommended): Input causal graph

We now input a causal graph in the GML graph format (recommended). You can also use the DOT format.

[5]:

# With graph

model = CausalModel(
        data=df,
        treatment=data["treatment_name"],
        outcome=data["outcome_name"],
        graph=data["gml_graph"]
        )

INFO:dowhy.do_why:Model to find the causal effect of treatment ['v'] on outcome ['y']

[6]:

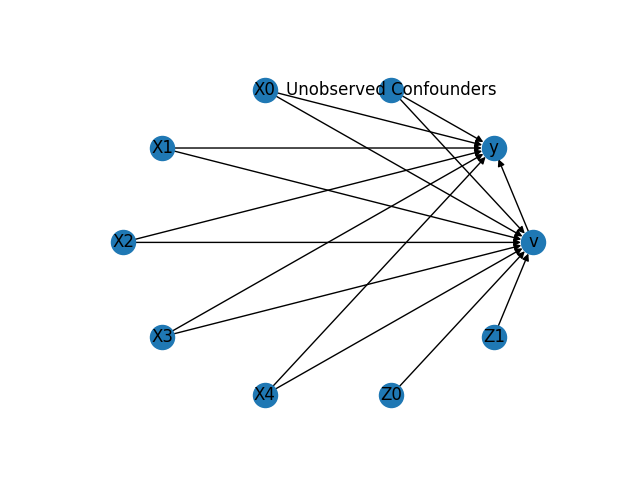

model.view_model()

WARNING:dowhy.causal_graph:Warning: Pygraphviz cannot be loaded. Check that graphviz and pygraphviz are installed.

INFO:dowhy.causal_graph:Using Matplotlib for plotting

/home/amit/virtualenvs/python37/lib/python3.7/site-packages/networkx/drawing/nx_pylab.py:579: MatplotlibDeprecationWarning:

The iterable function was deprecated in Matplotlib 3.1 and will be removed in 3.3. Use np.iterable instead.

if not cb.iterable(width):

/home/amit/virtualenvs/python37/lib/python3.7/site-packages/networkx/drawing/nx_pylab.py:676: MatplotlibDeprecationWarning:

The iterable function was deprecated in Matplotlib 3.1 and will be removed in 3.3. Use np.iterable instead.

if cb.iterable(node_size): # many node sizes

[7]:

from IPython.display import Image, display

display(Image(filename="causal_model.png"))

The above causal graph shows the assumptions encoded in the causal model. We can now use this graph to first identify the causal effect (go from a causal estimand to a probability expression), and then estimate the causal effect.

DoWhy philosophy: Keep identification and estimation separate

Identification can be achieved without access to the data, using only the graph. This results in an expression to be computed, which can then be evaluated on the available data in the estimation step. It is important to understand that these are orthogonal steps.
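As a rough illustration of how identification needs only the graph, the sketch below reads the edges from a DOT string like the one printed earlier and derives candidate adjustment variables and instruments without touching any data. It is a simplified stand-in, not DoWhy's identification algorithm: the helper parents and the instrument rule (parents of the treatment that are not parents of the outcome) are assumptions that hold for this flat graph only.

```python
import re

# DOT graph in the same style as the one printed by the dataset generator.
dot = ('digraph { v ->y; U[label="Unobserved Confounders"]; U->v; U->y;'
       'X0-> v; X1-> v; X2-> v; X3-> v; X4-> v;'
       'X0-> y; X1-> y; X2-> y; X3-> y; X4-> y;Z0-> v; Z1-> v;}')

# Collect directed edges of the form "a -> b".
edges = re.findall(r"(\w+)\s*->\s*(\w+)", dot)


def parents(node):
    """Return the set of direct causes of `node` (hypothetical helper)."""
    return {src for src, dst in edges if dst == node}


treatment, outcome = "v", "y"

# Common causes: direct parents of both treatment and outcome.
common_causes = parents(treatment) & parents(outcome)
observed_common_causes = common_causes - {"U"}  # U is unobserved

# Simplified instrument rule, valid for this flat graph only.
instruments = parents(treatment) - parents(outcome)

print("adjust for:", sorted(observed_common_causes))
print("instruments:", sorted(instruments))
```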

Identification

[8]:

identified_estimand = model.identify_effect()

print(identified_estimand)

INFO:dowhy.causal_identifier:Common causes of treatment and outcome:['X4', 'X1', 'Unobserved Confounders', 'X0', 'X2', 'X3']

WARNING:dowhy.causal_identifier:There are unobserved common causes. Causal effect cannot be identified.

WARN: Do you want to continue by ignoring these unobserved confounders? [y/n] y

INFO:dowhy.causal_identifier:Instrumental variables for treatment and outcome:['Z1', 'Z0']

Estimand type: ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

──(Expectation(y|X4,X1,X0,X2,X3))

dv

Estimand assumption 1, Unconfoundedness: If U→v and U→y then P(y|v,X4,X1,X0,X2,X3,U) = P(y|v,X4,X1,X0,X2,X3)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, Z1)/Derivative(v, Z1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→Z1,Z0)

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→v, then ¬(Z1,Z0→y)

If you want to disable the warning for ignoring unobserved confounders, you can add the parameter flag proceed_when_unidentifiable. The same parameter can also be passed when instantiating the CausalModel object.

[9]:

identified_estimand = model.identify_effect(proceed_when_unidentifiable=True)

print(identified_estimand)

INFO:dowhy.causal_identifier:Common causes of treatment and outcome:['X4', 'X1', 'Unobserved Confounders', 'X0', 'X2', 'X3']

INFO:dowhy.causal_identifier:All common causes are observed. Causal effect can be identified.

INFO:dowhy.causal_identifier:Instrumental variables for treatment and outcome:['Z1', 'Z0']

Estimand type: ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

──(Expectation(y|X4,X1,X0,X2,X3))

dv

Estimand assumption 1, Unconfoundedness: If U→v and U→y then P(y|v,X4,X1,X0,X2,X3,U) = P(y|v,X4,X1,X0,X2,X3)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, Z1)/Derivative(v, Z1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→Z1,Z0)

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→v, then ¬(Z1,Z0→y)
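The iv estimand above is a ratio: the change in the outcome per unit change in the instrument, divided by the change in the treatment per unit change in the instrument. Under a linear model with a single instrument this reduces to a ratio of covariances (the Wald estimator). The sketch below uses simulated data with assumed coefficients and is not DoWhy's estimator code.

```python
import numpy as np

rng = np.random.default_rng(1)  # arbitrary seed
n, beta = 20_000, 10.0

u = rng.normal(size=n)  # unobserved confounder
z = rng.normal(size=n)  # instrument: affects v, never y directly
v = (z + u + rng.normal(size=n) > 0).astype(float)
y = beta * v + 2.0 * u + rng.normal(size=n)

# Ratio of the instrument's association with y to its association with v.
iv_estimate = np.cov(y, z)[0, 1] / np.cov(v, z)[0, 1]

# For comparison: the slope of y on v alone is biased, since u is not adjusted for.
naive_slope = np.cov(y, v)[0, 1] / np.var(v, ddof=1)

print("IV estimate:", iv_estimate)
print("naive slope:", naive_slope)
```

The instrument recovers the effect even though u is never observed, provided the as-if-random and exclusion assumptions listed in the estimand hold.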

Estimation

[10]:

causal_estimate = model.estimate_effect(identified_estimand,
        method_name="backdoor.linear_regression")

print(causal_estimate)

print("Causal Estimate is " + str(causal_estimate.value))

INFO:dowhy.causal_estimator:INFO: Using Linear Regression Estimator

INFO:dowhy.causal_estimator:b: y~v+X4+X1+X0+X2+X3

*** Causal Estimate ***

## Target estimand

Estimand type: ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

──(Expectation(y|X4,X1,X0,X2,X3))

dv

Estimand assumption 1, Unconfoundedness: If U→v and U→y then P(y|v,X4,X1,X0,X2,X3,U) = P(y|v,X4,X1,X0,X2,X3)

### Estimand : 2

Estimand name: iv

Estimand expression:

Expectation(Derivative(y, Z1)/Derivative(v, Z1))

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→Z1,Z0)

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→v, then ¬(Z1,Z0→y)

## Realized estimand

b: y~v+X4+X1+X0+X2+X3

## Estimate

Value: 9.9999999999994

Causal Estimate is 9.9999999999994
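The realized estimand y~v+X4+X1+X0+X2+X3 is an ordinary regression of the outcome on the treatment and the common causes, with the coefficient of v read off as the effect. A minimal NumPy sketch of that idea follows; the simulated data and coefficients are assumptions, and the code is not DoWhy's linear regression estimator.

```python
import numpy as np

rng = np.random.default_rng(2)  # arbitrary seed
n, beta = 5_000, 10.0

X = rng.normal(size=(n, 5))  # observed common causes
v = (X.sum(axis=1) + rng.normal(size=n) > 0).astype(float)
y = (beta * v
     + X @ np.array([1.0, 2.0, 1.5, 0.5, 3.0])
     + rng.normal(scale=0.1, size=n))

# Design matrix: intercept, treatment, then the common causes.
design = np.column_stack([np.ones(n), v, X])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)

effect = coef[1]  # coefficient of the treatment column
print("estimated effect:", effect)
```

When the outcome really is linear in the treatment and the common causes, as in the simulated dataset, this estimator lands very close to the true beta.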

Interface 2: Specify common causes and instruments

[11]:

# Without graph

model = CausalModel(
        data=df,
        treatment=data["treatment_name"],
        outcome=data["outcome_name"],
        common_causes=data["common_causes_names"])

WARNING:dowhy.do_why:Causal Graph not provided. DoWhy will construct a graph based on data inputs.

INFO:dowhy.do_why:Model to find the causal effect of treatment ['v'] on outcome ['y']

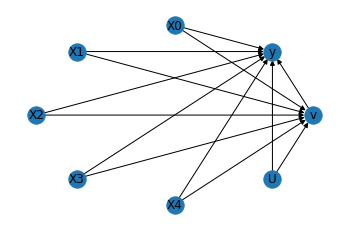

[12]:

model.view_model()

WARNING:dowhy.causal_graph:Warning: Pygraphviz cannot be loaded. Check that graphviz and pygraphviz are installed.

INFO:dowhy.causal_graph:Using Matplotlib for plotting

/home/amit/virtualenvs/python37/lib/python3.7/site-packages/networkx/drawing/nx_pylab.py:579: MatplotlibDeprecationWarning:

The iterable function was deprecated in Matplotlib 3.1 and will be removed in 3.3. Use np.iterable instead.

if not cb.iterable(width):

/home/amit/virtualenvs/python37/lib/python3.7/site-packages/networkx/drawing/nx_pylab.py:676: MatplotlibDeprecationWarning:

The iterable function was deprecated in Matplotlib 3.1 and will be removed in 3.3. Use np.iterable instead.

if cb.iterable(node_size): # many node sizes

We get the same causal graph, minus the instruments, which were not specified here. Identification and estimation are done as before.

[13]:

identified_estimand = model.identify_effect()

INFO:dowhy.causal_identifier:Common causes of treatment and outcome:['U', 'X4', 'X1', 'X0', 'X2', 'X3']

WARNING:dowhy.causal_identifier:There are unobserved common causes. Causal effect cannot be identified.

WARN: Do you want to continue by ignoring these unobserved confounders? [y/n] y

INFO:dowhy.causal_identifier:Instrumental variables for treatment and outcome:[]

Estimation

[14]:

estimate = model.estimate_effect(identified_estimand,
        method_name="backdoor.propensity_score_matching")

print(estimate)

print("Causal Estimate is " + str(estimate.value))

INFO:dowhy.causal_estimator:INFO: Using Propensity Score Matching Estimator

INFO:dowhy.causal_estimator:b: y~v+X4+X1+X0+X2+X3

*** Causal Estimate ***

## Target estimand

Estimand type: ate

### Estimand : 1

Estimand name: backdoor

Estimand expression:

d

──(Expectation(y|X4,X1,X0,X2,X3))

dv

Estimand assumption 1, Unconfoundedness: If U→v and U→y then P(y|v,X4,X1,X0,X2,X3,U) = P(y|v,X4,X1,X0,X2,X3)

### Estimand : 2

Estimand name: iv

No such variable found!

## Realized estimand

b: y~v+X4+X1+X0+X2+X3

## Estimate

Value: 12.4138990048773

Causal Estimate is 12.4138990048773
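Propensity score matching works in three steps: model the probability of treatment given the common causes, pair each treated unit with the control unit whose score is closest, and average the outcome differences over the pairs. The sketch below illustrates these steps with NumPy on simulated data with a single confounder. The logistic fit by Newton's method, the nearest-neighbour rule with replacement, and all coefficients are assumptions for illustration; DoWhy's own estimator may differ in each of these choices.

```python
import numpy as np

rng = np.random.default_rng(3)  # arbitrary seed
n, beta = 4_000, 10.0

x = rng.normal(size=n)  # single observed confounder
v = (rng.random(n) < 1 / (1 + np.exp(-x))).astype(float)
y = beta * v + 3.0 * x + rng.normal(size=n)

# Step 1: fit a logistic regression for P(v = 1 | x) with Newton's method.
A = np.column_stack([np.ones(n), x])
w = np.zeros(2)
for _ in range(25):
    p = 1 / (1 + np.exp(-A @ w))
    grad = A.T @ (v - p)
    hess = (A * (p * (1 - p))[:, None]).T @ A
    w += np.linalg.solve(hess, grad)
score = 1 / (1 + np.exp(-A @ w))

# Step 2: for each treated unit, find the control with the closest score.
treated = np.flatnonzero(v == 1)
control = np.flatnonzero(v == 0)
order = np.argsort(score[control])
sorted_scores = score[control][order]
pos = np.searchsorted(sorted_scores, score[treated])
left = np.clip(pos - 1, 0, len(control) - 1)
right = np.clip(pos, 0, len(control) - 1)
use_left = (np.abs(sorted_scores[left] - score[treated])
            <= np.abs(sorted_scores[right] - score[treated]))
match = control[order][np.where(use_left, left, right)]

# Step 3: average the outcome differences over matched pairs.
# This is the effect on the treated; it equals beta here because
# the simulated effect is the same for every unit.
att = np.mean(y[treated] - y[match])
print("matching estimate:", att)
```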

Refuting the estimate

Let us now look at ways of refuting the estimate obtained.

Adding a random common cause variable

[15]:

res_random=model.refute_estimate(identified_estimand, estimate, method_name="random_common_cause")

print(res_random)

INFO:dowhy.causal_estimator:INFO: Using Propensity Score Matching Estimator

INFO:dowhy.causal_estimator:b: y~v+X4+X1+X0+X2+X3+w_random

Refute: Add a Random Common Cause

Estimated effect:(12.4138990048773,)

New effect:(12.427568331323032,)

Replacing treatment with a random (placebo) variable

[16]:

res_placebo=model.refute_estimate(identified_estimand, estimate,
        method_name="placebo_treatment_refuter", placebo_type="permute")

print(res_placebo)

INFO:dowhy.causal_estimator:INFO: Using Propensity Score Matching Estimator

INFO:dowhy.causal_estimator:b: y~placebo+X4+X1+X0+X2+X3

Refute: Use a Placebo Treatment

Estimated effect:(12.4138990048773,)

New effect:(-0.025198898772462442,)

Removing a random subset of the data

[17]:

res_subset=model.refute_estimate(identified_estimand, estimate,
        method_name="data_subset_refuter", subset_fraction=0.9)

print(res_subset)

INFO:dowhy.causal_estimator:INFO: Using Propensity Score Matching Estimator

INFO:dowhy.causal_estimator:b: y~v+X4+X1+X0+X2+X3

Refute: Use a subset of data

Estimated effect:(12.4138990048773,)

New effect:(12.379545785678552,)

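As you can see, the propensity score matching estimator is reasonably robust to these simple refutations: the estimate barely moves when a random common cause is added or a data subset is used, and it drops to nearly zero under a placebo treatment.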
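The logic of the three refutation checks can be sketched in a few lines of NumPy. This is an illustrative re-implementation on simulated data with assumed coefficients, not DoWhy's refuter code, and it swaps in a least-squares estimator (the hypothetical helper effect) for propensity score matching to stay short.

```python
import numpy as np

rng = np.random.default_rng(4)  # arbitrary seed
n, beta = 5_000, 10.0

X = rng.normal(size=(n, 3))  # observed common causes
v = (X.sum(axis=1) + rng.normal(size=n) > 0).astype(float)
y = beta * v + X @ np.array([1.0, 2.0, 3.0]) + rng.normal(size=n)


def effect(v, X, y):
    """Coefficient of v in a least-squares fit of y on [1, v, X]."""
    design = np.column_stack([np.ones(len(y)), v, X])
    return np.linalg.lstsq(design, y, rcond=None)[0][1]


original = effect(v, X, y)

# 1. Random common cause: an independent extra covariate should change little.
w_random = rng.normal(size=n)
with_random = effect(v, np.column_stack([X, w_random]), y)

# 2. Placebo treatment: permuting v breaks its link to y,
#    so the estimated effect should be close to zero.
placebo = effect(rng.permutation(v), X, y)

# 3. Data subset: re-estimate on a random 90% of the rows.
keep = rng.choice(n, size=int(0.9 * n), replace=False)
subset = effect(v[keep], X[keep], y[keep])

print("original:", original)
print("with random common cause:", with_random)
print("placebo treatment:", placebo)
print("90% subset:", subset)
```

An estimate passes these checks when the first and third values stay near the original and the placebo value is near zero. Passing does not prove the estimate is correct, only that it is not contradicted by these particular tests.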